# atmos

> Universal tool for DevOps and Cloud Automation

This file contains all documentation content in a single document following the llmstxt.org standard.

## Best Practices

> Physics is the law, everything else is a recommendation.

> Anyone can break laws created by people, but I have yet to see anyone break the laws of physics.

> — **Elon Musk**

Learn how to best leverage Stacks and Components together with Atmos.

---

## Component Best Practices

Here are some essential best practices to follow when designing architectures using infrastructure as code (IaC), focusing on optimizing component design, reusability, and lifecycle management. These guidelines are designed to help developers and operators build efficient, scalable, and reliable systems, ensuring a smooth and effective infrastructure management process.

Also, be sure to review the [Terraform Best Practices](/best-practices/terraform) for additional guidance on using Terraform with Atmos.

## Keep Your Components Small to Reduce the Blast Radius of Changes

Focus on creating small, reusable, single-purpose components that adhere to the UNIX philosophy by doing one thing well. This strategy leads to simpler updates, more straightforward troubleshooting, quicker plan/apply cycles, and a clearer separation of responsibilities. Best of all, your state remains small and complexity remains manageable.

Anti-patterns to avoid include:

- Combining VPCs with databases in the same component

- Defining every dependency needed by an application in a single component (provided there's no shared lifecycle)

## Split Components By Lifecycle

To keep your components small, consider breaking them apart by their Software Development Lifecycle (SDLC).

Things that always change together go together. Things that seldom change together should be managed separately.

Keep the coupling loose, and use remote state for cohesion.

For instance, a VPC, which is rarely destroyed, should be managed separately from more dynamic resources like clusters or databases that may frequently scale or undergo updates.
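
As a minimal sketch of this split, the stack manifest below configures the network and the cluster as two separate components, each with its own state and lifecycle. The file name, the component names (`vpc`, `eks-cluster`), and the variables are hypothetical and only illustrate the shape.

```yaml
# stacks/deploy/dev.yaml (hypothetical file name)
components:
  terraform:
    # Rarely changed or destroyed: managed on its own lifecycle
    vpc:
      vars:
        cidr_block: "10.0.0.0/16" # illustrative variable
    # Scales and upgrades frequently: separate component, separate state
    eks-cluster:
      vars:
        kubernetes_version: "1.29" # illustrative variable
```

With this layout, a cluster upgrade can be planned and applied without touching the network's state.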

## Make Them Opinionated, But Not Too Opinionated

Ensure components are generalized to prevent the proliferation of similar components, thereby promoting easier testing, reuse, and maintenance.

:::important Don't Treat Components like Child Modules

Don't force users to use generic components if that will radically complicate the configuration.

The goal is to make 80% of your infrastructure highly reusable with generic single purpose components.

The remaining 20% might need to be specialized for your use case, and that's okay.

:::

## Avoid Single Resource Components

If you find yourself writing a component so small that it manages only a single resource (e.g. an IAM Policy), consider whether it should be part of a larger component.

:::tip Stack Configurations are Not a Replacement for Terraform

The biggest risk for newcomers to Atmos is to over-architect components into extremely DRY single-purpose components.

Stack configurations in YAML should not just be a proxy for Terraform resources.

Use Terraform for its strengths, and complement it with YAML when it makes sense for very straightforward configuration.

:::

## Use Parameterization, But Avoid Over-Parameterization

Good parameterization ensures components are reusable, but components become difficult to test and document with too many parameters.

Often, child modules accept more parameters than the root module. You can always add more parameters to the root module as needed, but it's hard to remove them once they are there.

## Avoid Creating Factories Inside of Components

[Factories are common software design patterns](https://en.wikipedia.org/wiki/Factory_(object-oriented_programming)) that allow you to create multiple instances of a component.

To minimize the blast radius of changes and maintain fast plan/apply cycles, do not embed factories within components that provision lists of resources.

Examples of anti-patterns include:

- Reading a configuration file inside of Terraform to create multiple Buckets

- Using a `for_each` loop to create multiple DNS records from a variable input (you may hit rate limits when your zones get large enough; it's happened to us)

Instead, leverage [Stack configurations to serve as factories](/core-concepts/stacks) for provisioning multiple component instances.

This approach keeps the state isolated and scales efficiently with the increasing number of component instances.

Please note, it's perfectly fine to use `for_each` loops sometimes to provision groups of resources; just use them in moderation and be aware of the potential downsides, such as creating massive states with a wide blast radius. For example, maybe you can safely manage a collection of resources this way.

:::note Do as we say, not as we do

It is with humility that we state this best practice. Even many of our own Cloud Posse components do not follow this because they were written before we realized the overwhelming benefits of this approach.

:::

## Use Components Inside of Factories

Google discusses the "factories" approach in the post [Resource Factories: A descriptive approach to Terraform](https://medium.com/google-cloud/resource-factories-a-descriptive-approach-to-terraform-581b3ebb59c). This concept is familiar to every major programming framework, and you can apply it to Terraform too.

However, unlike Google's approach of creating the factory inside the component ([which we don't recommend](#avoid-creating-factories-inside-of-components)), we suggest using the stack configuration as the factory and the component as the product.

By following this method, you create a single component for a specific purpose, such as a VPC, database, or Kubernetes cluster. Then, you can instantiate multiple instances of that component in your stack configuration.

In the factory pattern, the stack configuration acts as the factory and each component defined in it is the product: the stack configuration is used to create and configure multiple component instances.

A component provides specific functionality but is not responsible for its own instantiation or configuration; this responsibility is delegated to the factory.

This approach decouples your architecture from the configuration, resulting in smaller state files and independent lifecycle management for each instance. Most importantly, it maximizes the reusability of your components.
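
A minimal sketch of the stack configuration acting as the factory: two instances are stamped out from one component by pointing `metadata.component` at the same Terraform root module. The component name `s3-bucket`, the instance names, and the `name` variable are hypothetical.

```yaml
components:
  terraform:
    # Instance 1 of the hypothetical `s3-bucket` component
    assets-bucket:
      metadata:
        component: s3-bucket # the Terraform root module to instantiate
      vars:
        name: assets
    # Instance 2: same code, separate state and lifecycle
    logs-bucket:
      metadata:
        component: s3-bucket
      vars:
        name: logs
```

Adding a third bucket is then a configuration change in the stack, not a code change in the component.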

## Use Component Libraries & Vendoring

Utilize a centralized [component library](/core-concepts/components/library) to distribute and share components across the organization efficiently. This approach enhances discoverability by centralizing where components are stored, preventing sprawl, and ensuring components are easily accessible to everyone. Employ vendoring to retrieve remote dependencies, like components, ensuring the practice of immutable infrastructure.

## Organize Related Components with Folders

Organize multiple related components in a common folder. Use nested folders as necessary to logically group components, for example by cloud provider and layer (e.g. `components/terraform/aws/network/`).

## Document Component Interfaces and Usage

Utilize tools such as [terraform-docs](https://terraform-docs.io) to thoroughly document the input variables and outputs of your component. Include snippets of stack configuration to simplify understanding for developers on integrating the component into their stack configurations. Providing examples that cover common use cases of the component is particularly effective.

## Version Components for Breaking Changes

Use versioned folders within the component to delineate major versions (e.g. `/components/terraform//v1/`)
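
As a hedged illustration, a stack could pin a component instance to one major version by pointing `metadata.component` at the versioned folder. The component name `vpc` and the folder layout below are assumptions made for this sketch only.

```yaml
components:
  terraform:
    vpc:
      metadata:
        # Hypothetical versioned folder under the Terraform components path,
        # e.g. components/terraform/vpc/v1
        component: vpc/v1
      vars:
        name: main # illustrative variable
```

Moving a stack to the next major version then becomes an explicit, reviewable one-line change.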

## Use a Monorepo for Your Components

For streamlined development and simplified dependency management, smaller companies should consolidate stacks and components in a single monorepo, facilitating easier updates and unified versioning. Larger companies and enterprises with multiple monorepos can benefit from a central repository for upstream components, and then use vendoring to easily pull in these shared components to team-specific monorepos.

## Maintain Loose Coupling Between Components

Avoid directly invoking one component from within another to ensure components remain loosely coupled. Specifically for Terraform components (root modules), this practice is unsupported due to the inability to define a backend in a child module, potentially leading to unexpected outcomes. It's crucial to steer clear of this approach to maintain system integrity.

## Reserve Code Generation as an Escape Hatch for Emergencies

We generally advise against using code generation for application logic (components), because it's challenging to ensure good test coverage (e.g. with `terratest`) and no one likes to code review machine-generated boilerplate in Pull Requests.

If you find yourself in a situation that seems to require code generation, take a step back and consider if that's the right approach.

- Do not code generate providers to [overcome "limitations" in Terraform](https://github.com/hashicorp/terraform/issues/19932#issuecomment-1817043906), for example, to iterate over providers. This is a red flag. Instead, architect your components to work with a single provider.

- If you are programmatically combining several child modules, consider if they should instead be separated by lifecycle.

When you follow these rules, root modules become highly reusable, and you reduce the amount of state managed by a single component and, therefore, the blast radius of changes.

## Separate Your State by Region

For Disaster Recovery purposes, always strive to keep the state of your components separate by region.

You don't want a regional outage to affect your ability to manage infrastructure in other regions.

## Limit Providers to One or Two Per Component

Avoid using multiple providers in a single component, as it reduces the reusability of the component and increases the complexity and blast radius of what it manages.

Consider instead "hub" and "spoke" models, where each spoke is its own component with its own lifecycle.

In this model, the "spoke" will usually have two providers, one for the current context and one for the "hub."

---

## Stacks Best Practices

Here are some essential best practices to follow when designing the Stack configurations that describe your architectures. These guidelines are intended to help developers and operators think about how they model the configuration of their infrastructure in Atmos, for maximum clarity and long-term maintainability.

## Define Factories in Stack Configurations

Avoid creating factories inside of components, which makes them overly complicated and leaves them burdened by massive state.

Instead, use stack configurations to serve as factories for provisioning multiple component instances.

This approach keeps the state isolated and scales efficiently with the increasing number of component instances.

## Treat Stack Templates like an Escape Hatch

Apply them carefully and only when necessary. Using templates instead of inheritance can make stack configurations complex and hard to manage. Be careful using stack templates together with the [factory pattern](#define-factories-in-stack-configurations).

The simplest templates are the best templates. Using variable interpolation is perfectly fine, but avoid using complex logic, conditionals, and loops in templates. If you find yourself needing to do this, consider if you are solving the problem in the right way.
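
A small sketch of what "variable interpolation only" can look like, assuming Go-template processing of stack manifests is enabled in `atmos.yaml` and that `.vars` is available in the template context. The component and variable names are hypothetical.

```yaml
vars:
  stage: dev

components:
  terraform:
    vpc:
      vars:
        # Plain interpolation of a value already in scope: easy to read and review.
        # No conditionals, loops, or other logic.
        name: "{{ .vars.stage }}-vpc"
```

If an expression needs a conditional or a loop to produce the right value, that is usually a sign the value belongs in an explicit per-stack override instead.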

## Avoid Too Many Levels of Imports

It's very difficult for others to follow relationships when there are too many nested levels and overrides.

:::warning Complexity rashes

**If you have more than (3) levels of imports, you're probably developing a complexity rash.**

Overly DRY configurations can lead to complexity rashes that are difficult to debug and maintain, and impossible for newcomers to understand.

:::

## Balance DRY Principles with Configuration Clarity

Avoid overly DRY configuration as it leads to complexity rashes. Sometimes repeating configuration is beneficial for maintenance and clarity.

In recent years, the DevOps industry has often embraced the DRY (Don’t Repeat Yourself) principle to an extreme. (And Atmos delivers!) While DRY aims to reduce redundancy and improve maintainability by eliminating duplicate code, overzealous application of this principle leads to complications and rigidity.

DRY is not a panacea. In fact, sometimes a bit of repetition is **beneficial**, particularly when anticipating future divergence in configurations or functionality. A balance between DRY and WET (Write Everything Twice) can offer more flexibility, and make it easier to see the entire context in one place without needing to trace through multiple abstractions or indirections.

Here’s why:

1. **Cognitive Load:** The more you strive for DRYness, the more indirection and abstraction layers you introduce. This makes it harder for developers because they need to navigate through multiple layers of imports and abstractions to grasp the complete picture.

2. **Plan for Future Divergence:** When initially similar configurations are likely to diverge over time, keeping them separate will make future changes easier.

3. **Premature Optimization:** Over-optimizing for DRYness may be a form of premature optimization. It’s important to recognize when to prioritize flexibility and clarity over minimal repetition.

## Reserve Code Generation for Stack Configuration

While we generally advise against using code generation for application logic (components), it's beneficial for creating configurations where appropriate, such as developer environments and SaaS tenants.

These configurations ought to be committed.

Also, consider if you can [use templates](/core-concepts/stacks/templates) instead.

## Use Mixin Pattern for Snippets of Stack Configuration

Employ the [mixin pattern](/core-concepts/stacks/inheritance/mixins) for clarity when there are brief, reusable configuration snippets. Steer clear of minimal stack configurations created simply for the sake of DRYness, as they frequently lead to too many levels of imports.
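
For illustration, a mixin can be as small as a file that sets a couple of shared variables and is imported wherever it applies. The file paths and variable values below are hypothetical; the two YAML documents stand for two separate files.

```yaml
# stacks/mixins/region/us-east-2.yaml (hypothetical mixin file)
vars:
  region: us-east-2
---
# stacks/deploy/dev.yaml (hypothetical stack that uses the mixin)
import:
  - mixins/region/us-east-2
  - catalog/myapp
```

The stack stays one import level away from the values it receives, which keeps the configuration easy to trace.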

## Use YAML Anchors to DRY Configuration

YAML anchors are pretty sweet and you don’t get those with tfvars.

:::important YAML Anchors Gotchas

When you define [YAML anchors](https://yaml.org/spec/1.2.2/#3222-anchors-and-aliases), they can only be used within the scope of the same file. This is not an Atmos limitation, but how YAML works. For example, anchors do not work together with [imports](/core-concepts/stacks/imports), where you define an anchor in one stack configuration and try to use it in another.

:::
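
A minimal sketch of an anchor and its alias inside a single stack file. The component names and variables are hypothetical; the anchor (`&vpc_defaults`) and alias (`*vpc_defaults`) syntax is standard YAML.

```yaml
components:
  terraform:
    vpc-blue:
      vars: &vpc_defaults # define the anchor on this mapping
        enable_nat_gateway: true
        max_subnet_count: 3
    vpc-green:
      vars: *vpc_defaults # reuse it via an alias (same file only)
```

Moving `vpc-green` into another file and importing the first one would leave the alias unresolved, because anchors are resolved by the YAML parser per file, before Atmos processes imports.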

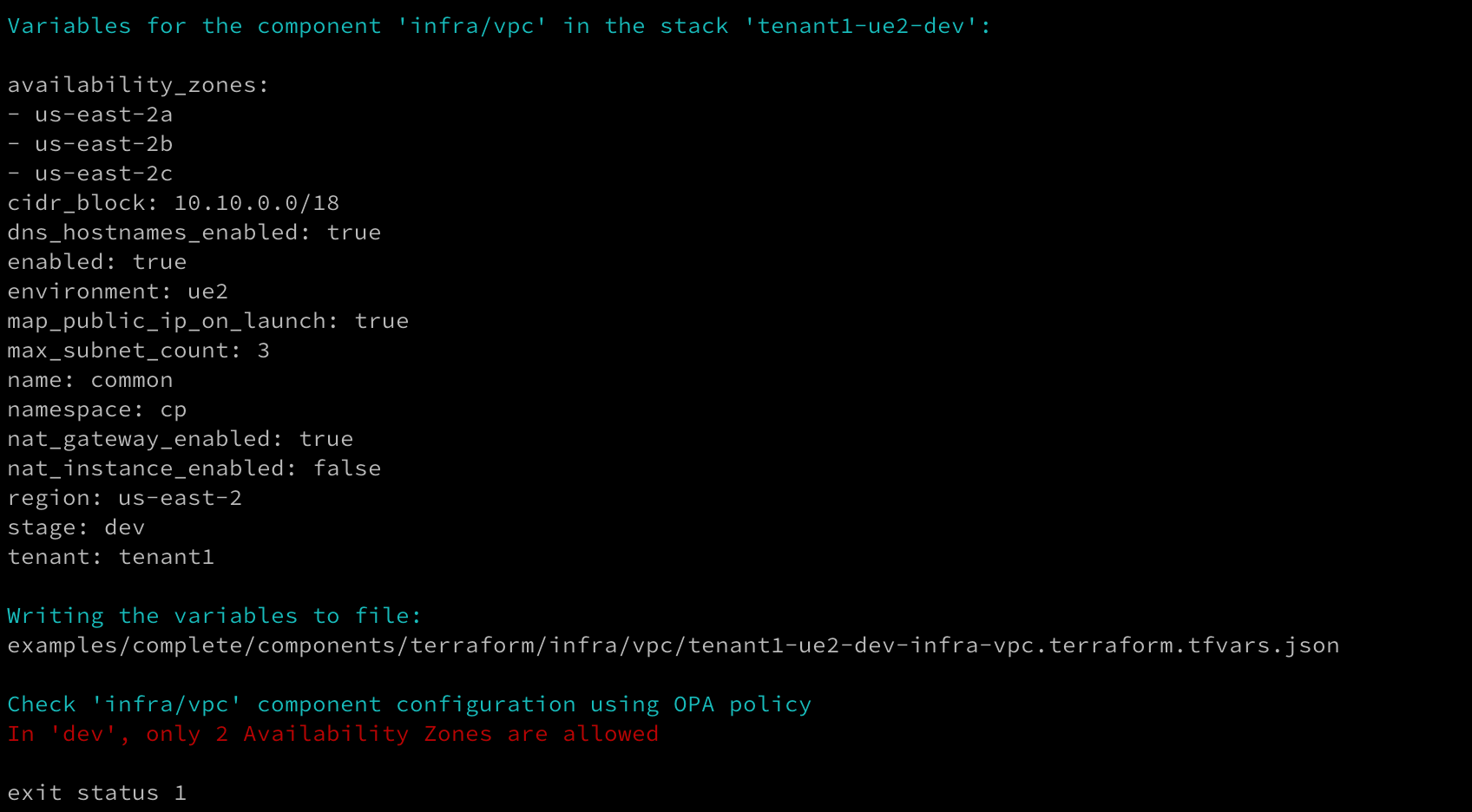

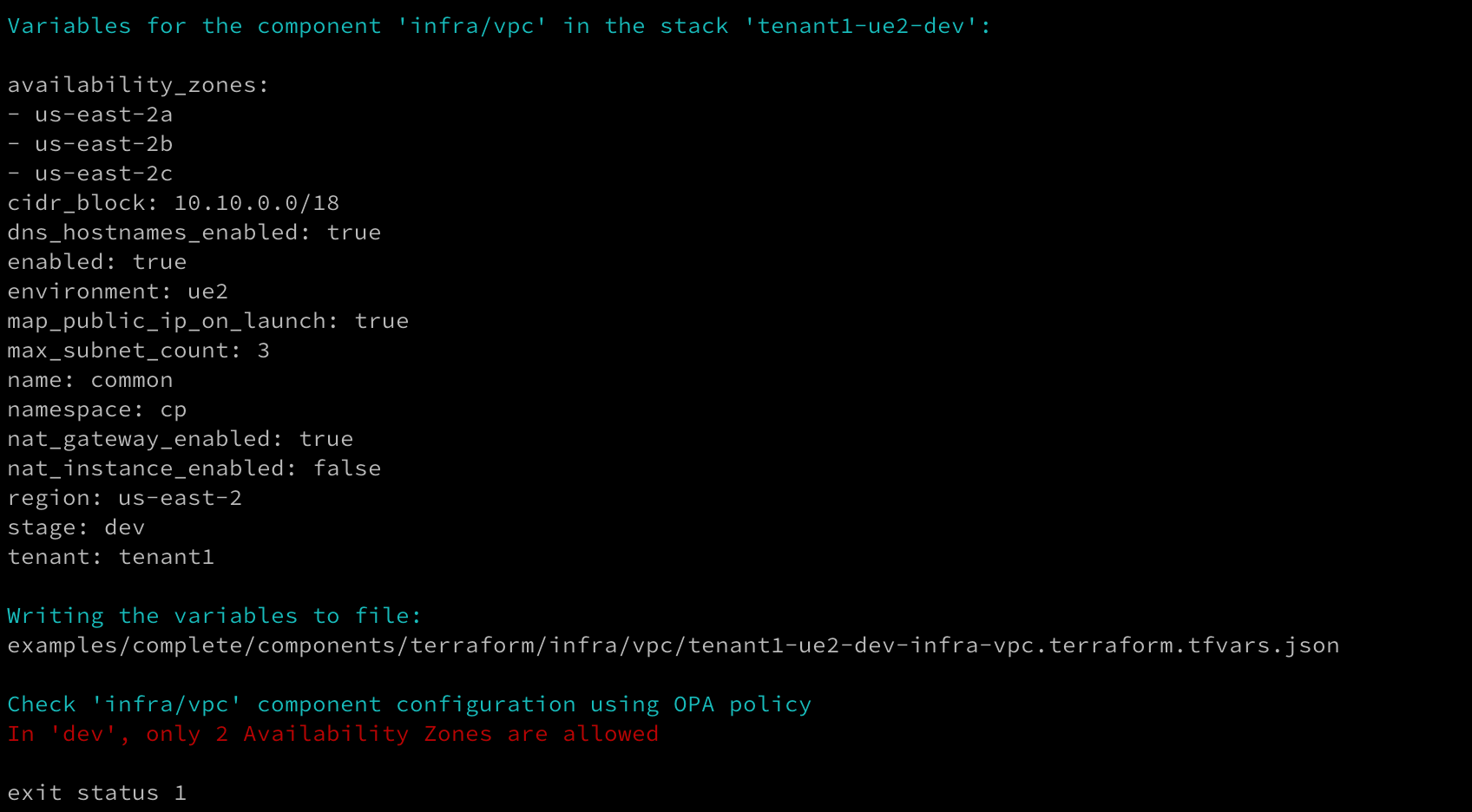

## Enforce Standards using OPA Policies

Apply OPA or JSON Schema validation within stacks to establish policies governing component usage. These policies can be tailored as needed, allowing the same component to be validated differently depending on its context of use.
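
A hedged sketch of attaching an OPA policy to a component through its `settings.validation` section. The check name and policy path are hypothetical, and the keys shown reflect our understanding of the validation settings, so verify them against the validation documentation before relying on them.

```yaml
components:
  terraform:
    vpc:
      settings:
        validation:
          check-vpc-with-opa: # hypothetical check name
            schema_type: opa
            schema_path: "vpc/validate-vpc.rego" # hypothetical policy file
            description: Check 'vpc' component configuration using an OPA policy
            timeout: 10
```

Because the validation section lives in the stack configuration, a production stack can reference a stricter policy than a development stack for the same component.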

---

## Terraform Best Practices with Atmos

These are some of the best practices we recommend when using Terraform with Atmos. They are opinionated and based on our experience working with Terraform and Atmos. When followed, they lead to more reusable and maintainable infrastructure as code.

Also, since [Terraform "root modules" are components](/core-concepts/components/terraform), be sure to review the [Component Best Practices](/best-practices/components) for additional guidance on using components with Atmos.

:::tip

[Cloud Posse](https://github.com/cloudposse) publishes their general [Terraform Best Practices](https://docs.cloudposse.com/reference/best-practices/terraform-best-practices/), which are more general and not specific to Atmos.

:::

## Never Include Components Inside of Other Components

We do not recommend consuming one Terraform component inside of another as that would defeat the purpose; each component is intended to be a loosely coupled unit of IaC with its own lifecycle.

Furthermore, since components define a state backend and providers, it's not advisable to call one root module from another root module, because only the state backend of the first root module will be used, leading to unpredictable results.

## Use Terraform Overrides to Extend ("Monkey Patch") Vendored Components

When you need to extend a component, we recommend using [Terraform Overrides](https://developer.hashicorp.com/terraform/language/files/override).

It's essentially a Terraform-native way of [Monkey Patching](https://en.wikipedia.org/wiki/Monkey_patch).

This way, you can maintain the original component as a dependency and only override the parts you need to change.

:::warning Pitfall!

Use this technique cautiously because your overrides may break if the upstream interfaces change. There’s no contract that an upstream component will remain the same.

:::

To gain a deeper understanding of how this works, you have to understand how [Terraform overrides work](https://developer.hashicorp.com/terraform/language/files/override), and then it will make sense how [vendoring with Atmos](/core-concepts/vendor) can be used to extend components.

### Comparison to Other Languages or Frameworks

#### Swizzling

In [Objective-C](https://spin.atomicobject.com/method-swizzling-objective-c/) and [Swift-UI](https://medium.com/@pallavidipke07/method-swizzling-in-swift-5c9d9ab008e4), swizzling is the method of changing the implementation of an existing selector.

In Docusaurus, [swizzling a component](https://docusaurus.io/docs/swizzling) means providing an alternative implementation that takes precedence over the component provided by the theme.

#### Monkey Patching

You can think of it also like [Monkey Patching](https://en.wikipedia.org/wiki/Monkey_patch) in [Ruby](http://blog.headius.com/2012/11/refining-ruby.html) or [React components](https://medium.com/@singhalaryan06/monkey-patching-mocking-hooks-and-methods-in-react-f6afef73e423), enabling you to override the default implementation. Gatsby has a similar concept called theme [shadowing](https://www.gatsbyjs.com/docs/how-to/plugins-and-themes/shadowing/).

---

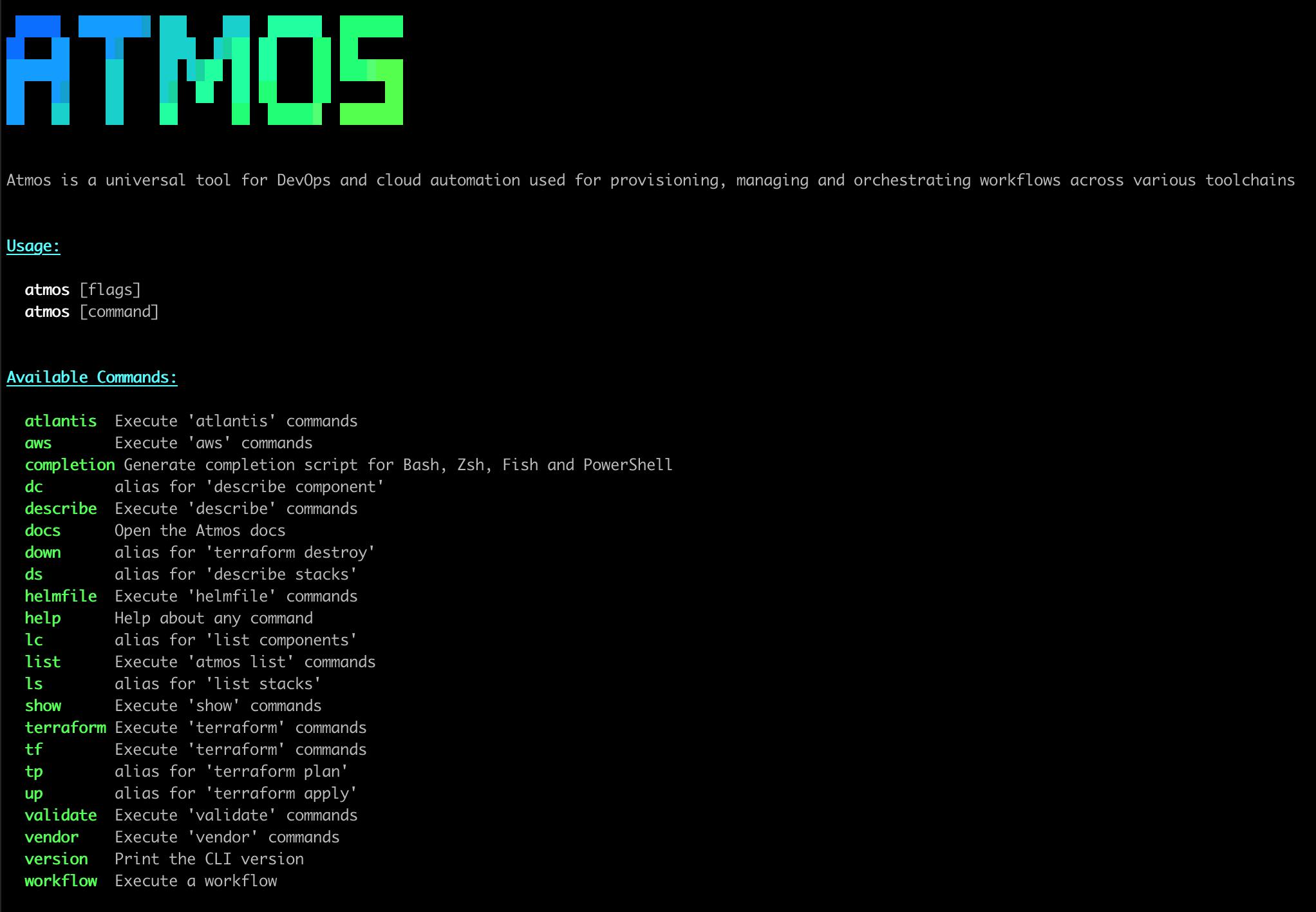

## CLI Commands Cheat Sheet

```

atmos

```

Start an interactive UI to select an Atmos command, component and stack. Press "Enter" to execute the command.

```

atmos help

```

Show help for all Atmos CLI commands

```

atmos docs

```

Open the Atmos documentation in a web browser

```

atmos version

```

Get the Atmos CLI version

```

atmos completion

```

Generate completion scripts for `Bash`, `Zsh`, `Fish` and `PowerShell`

```

atmos describe affected

```

Generate a list of the affected Atmos components and stacks given two Git commits

```

atmos describe component

```

Describe the complete configuration for an Atmos component in an Atmos stack

```

atmos describe config

```

Show the final (deep-merged) CLI configuration of all `atmos.yaml` file(s)

```

atmos describe dependents

```

Show a list of Atmos components in Atmos stacks that depend on the provided Atmos component

```

atmos describe stacks

```

Show the fully deep-merged configuration for all Atmos stacks and the components in the stacks

```

atmos describe workflows

```

Show the configured Atmos workflows

```

atmos terraform

```

Execute `terraform` commands

```

atmos terraform clean

```

Delete the `.terraform` folder, the folder that `TF_DATA_DIR` ENV var points to, `.terraform.lock.hcl` file, `varfile` and `planfile` for a component in a stack

```

atmos terraform deploy

```

Execute `terraform apply -auto-approve` on an Atmos component in an Atmos stack

```

atmos terraform generate backend

```

Generate a Terraform backend config file for an Atmos terraform component in an Atmos stack

```

atmos terraform generate backends

```

Generate the Terraform backend config files for all Atmos terraform components in all stacks

```

atmos terraform generate varfile

```

Generate a varfile (`.tfvars`) for an Atmos terraform component in an Atmos stack

```

atmos terraform generate varfiles

```

Generate the terraform varfiles (`.tfvars`) for all Atmos terraform components in all stacks

```

atmos terraform shell

```

Start a new `SHELL` configured with the environment for an Atmos component in a stack to allow executing all native terraform commands inside the shell without using any atmos-specific arguments and flags

```

atmos terraform workspace

```

Calculate the Terraform workspace for an Atmos component (from the context variables and stack config), then run `terraform init -reconfigure`, then select the workspace by executing the `terraform workspace select` command

```

atmos helmfile

```

Execute `helmfile` commands

```

atmos helmfile generate varfile

```

Generate a varfile for a helmfile component in an Atmos stack

```

atmos validate component

```

Validate an Atmos component in a stack using JSON Schema and OPA policies

```

atmos validate stacks

```

Validate all Atmos stack configurations

```

atmos vendor pull

```

Pull sources and mixins from remote repositories for Terraform and Helmfile components and other artifacts

```

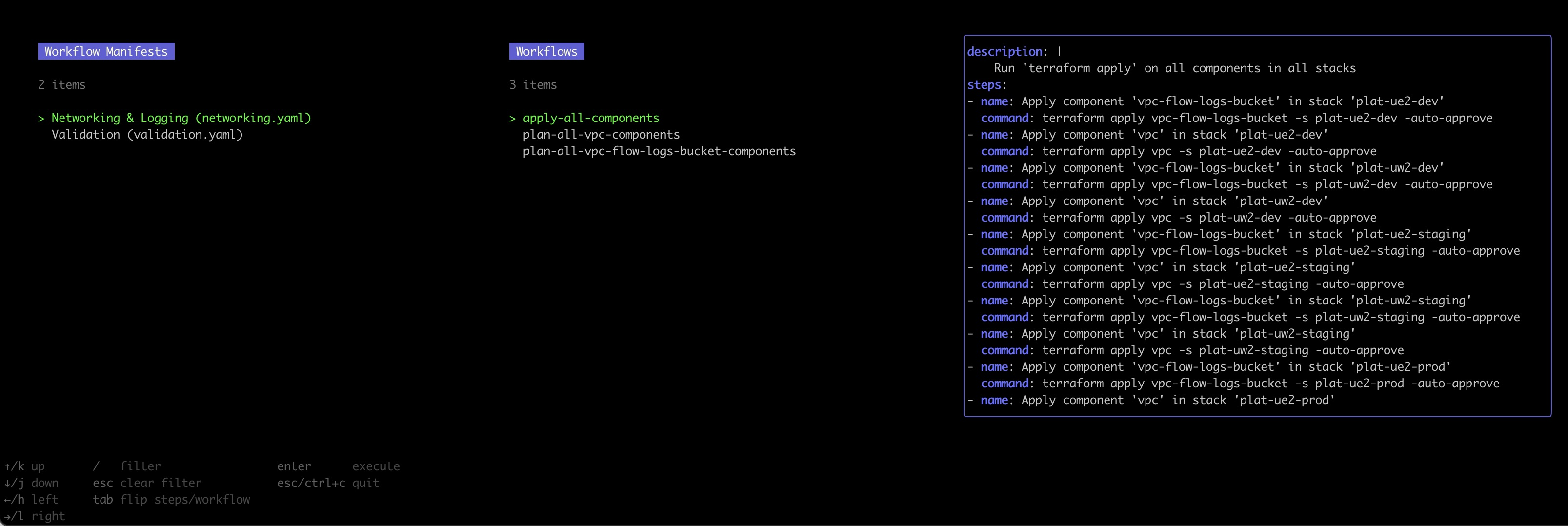

atmos workflow

```

Perform sequential execution of `atmos` and `shell` commands defined as workflow steps

```

atmos aws eks update-kubeconfig

```

Download `kubeconfig` from an EKS cluster and save it to a file

```

atmos atlantis generate repo-config

```

Generates repository configuration for Atlantis

---

## Atmos Cheatsheet

```shell

atmos list stacks

```

```
├── atmos.yaml
├── components
│   └── myapp
│       ├── main.tf
│       ├── outputs.tf
│       └── variables.tf
└── stacks
    ├── catalog
    │   └── myapp.yaml
    └── deploy
        ├── dev.yaml
        ├── prod.yaml
        └── staging.yaml
```

```yaml
import:
  - catalog/something

vars:
  key: value

components:
  terraform:
    $component:
      vars:
        foo: "bar"
```

```yaml
import:
  - catalog/something
  - path: "catalog/something/else"
    context:
      key: value
    skip_templates_processing: false
    ignore_missing_template_values: false
    skip_if_missing: false
```

```shell

atmos validate stacks

```

```shell

atmos list components

```

```shell

atmos validate component $component -s $stack

atmos validate component $component -s $stack --schema-type jsonschema --schema-path $component.json

atmos validate component $component -s $stack --schema-type opa --schema-path $component.rego

atmos validate component $component -s $stack --schema-type opa --schema-path $component.rego --module-paths catalog

atmos validate component $component -s $stack --timeout 15

```

```shell

atmos list workflows

```

```shell

atmos terraform plan

```

```shell

atmos terraform apply $component --stack $stack

atmos terraform apply $component --stack $stack -auto-approve

atmos terraform apply $component --stack $stack $planfile

```

```shell
atmos terraform deploy
atmos terraform deploy $component --stack $stack -out $planfile
atmos terraform deploy $component --stack $stack -var "key=value"
```

```shell

atmos describe affected

atmos describe affected --verbose=true

atmos describe affected --ref refs/heads/main

atmos describe affected --ref refs/heads/my-new-branch --verbose=true

atmos describe affected --ref refs/heads/main --format json

atmos describe affected --ref refs/tags/v1.16.0 --file affected.yaml --format yaml

atmos describe affected --sha 3a5eafeab90426bd82bf5899896b28cc0bab3073 --file affected.json

atmos describe affected --sha 3a5eafeab90426bd82bf5899896b28cc0bab3073

atmos describe affected --ssh-key

atmos describe affected --ssh-key --ssh-key-password

atmos describe affected --repo-path

atmos describe affected --include-spacelift-admin-stacks=true

```

---

## Components Cheatsheet

```
├── atmos.yaml
├── components
│   └── myapp
│       ├── main.tf
│       ├── outputs.tf
│       └── variables.tf
└── stacks
    ├── catalog
    │   └── myapp.yaml
    └── deploy
        ├── dev.yaml
        ├── prod.yaml
        └── staging.yaml
```

```shell

atmos list components

```

```shell

atmos validate component $component -s $stack

atmos validate component $component -s $stack --schema-type jsonschema --schema-path $component.json

atmos validate component $component -s $stack --schema-type opa --schema-path $component.rego

atmos validate component $component -s $stack --schema-type opa --schema-path $component.rego --module-paths catalog

atmos validate component $component -s $stack --timeout 15

```

```shell

atmos terraform plan $component --stack $stack

atmos terraform plan $component --stack $stack -out $planfile

```

```shell

atmos terraform apply $component --stack $stack

atmos terraform apply $component --stack $stack -auto-approve

atmos terraform apply $component --stack $stack $planfile

```

```shell

atmos terraform deploy

atmos terraform deploy $component --stack $stack -out $planfile

atmos terraform deploy $component --stack $stack -var "key=value"

```

---

## Stacks Cheatsheet

```
├── atmos.yaml
├── components
│   └── myapp
│       ├── main.tf
│       ├── outputs.tf
│       └── variables.tf
└── stacks
    ├── catalog
    │   └── myapp.yaml
    └── deploy
        ├── dev.yaml
        ├── prod.yaml
        └── staging.yaml
```

```yaml
import:
  - catalog/something

vars:
  key: value

components:
  terraform:
    $component:
      vars:
        foo: "bar"
```

```yaml
terraform:
  overrides:
    env: {}
    settings: {}
    vars: {}
    command: "opentofu"
```

```yaml
terraform:
  components:
    $component:
      settings:
        spacelift:
          # The `autodeploy` setting was overridden with the value
          # from `terraform.overrides.settings.spacelift.autodeploy`
          autodeploy: true
          workspace_enabled: true
```

```shell

atmos list components

```

```shell

atmos validate component $component -s $stack

atmos validate component $component -s $stack --schema-type jsonschema --schema-path $component.json

atmos validate component $component -s $stack --schema-type opa --schema-path $component.rego

atmos validate component $component -s $stack --schema-type opa --schema-path $component.rego --module-paths catalog

atmos validate component $component -s $stack --timeout 15

```

---

## Vendoring Cheatsheet

```
├── atmos.yaml
├── vendor.yaml
└── components
    └── myapp
        ├── main.tf
        ├── outputs.tf
        └── variables.tf
```

```yaml title="vendor.yaml"
apiVersion: atmos/v1
kind: AtmosVendorConfig
metadata:
  name: example-vendor-config
  description: Atmos vendoring manifest
spec:
  imports:
    - "vendor/something"
  sources:
    - component: "vpc"
      source: "oci://public.ecr.aws/cloudposse/components/terraform/stable/aws/vpc:{{.Version}}"
      version: "latest"
      targets: ["components/terraform/infra/vpc/{{.Version}}"]
      included_paths: ["**/*.tf"]
      tags:
        - test
        - networking
```

```yaml title="components/$component/component.yaml"
apiVersion: atmos/v1
kind: ComponentVendorConfig
metadata:
  name: vpc-flow-logs-bucket-vendor-config
  description: Source and mixins config for vendoring of 'vpc-flow-logs-bucket' component
spec:
  source:
    uri: github.com/cloudposse/terraform-aws-components.git//modules/vpc-flow-logs-bucket?ref={{.Version}}
    version: 1.398.0
    included_paths: ["**/*.tf"]
    excluded_paths: ["**/context.tf"]
  mixins:
    - uri: https://raw.githubusercontent.com/cloudposse/terraform-null-label/0.25.0/exports/context.tf
      filename: context.tf
```

```shell

atmos vendor pull

atmos vendor pull --everything

atmos vendor pull --component vpc-mixin-1

atmos vendor pull -c vpc-mixin-2

atmos vendor pull -c vpc-mixin-3

atmos vendor pull -c vpc-mixin-4

atmos vendor pull --tags test

atmos vendor pull --tags networking,storage

```

---

## Atmos CLI

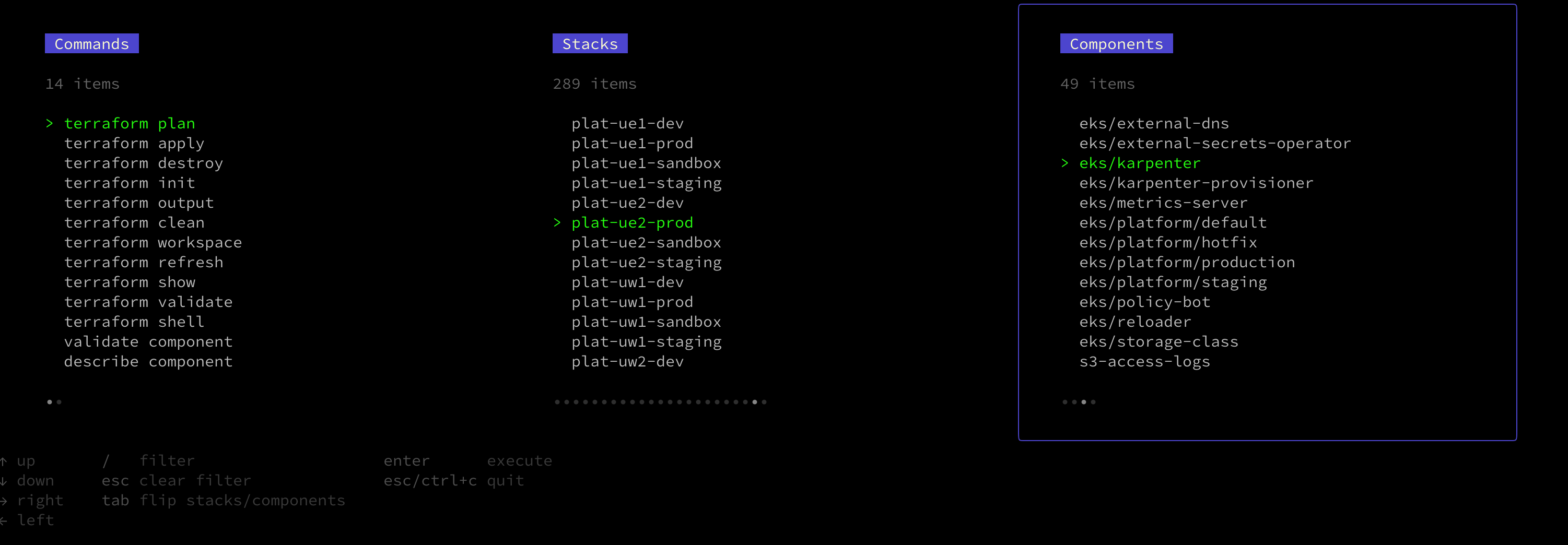

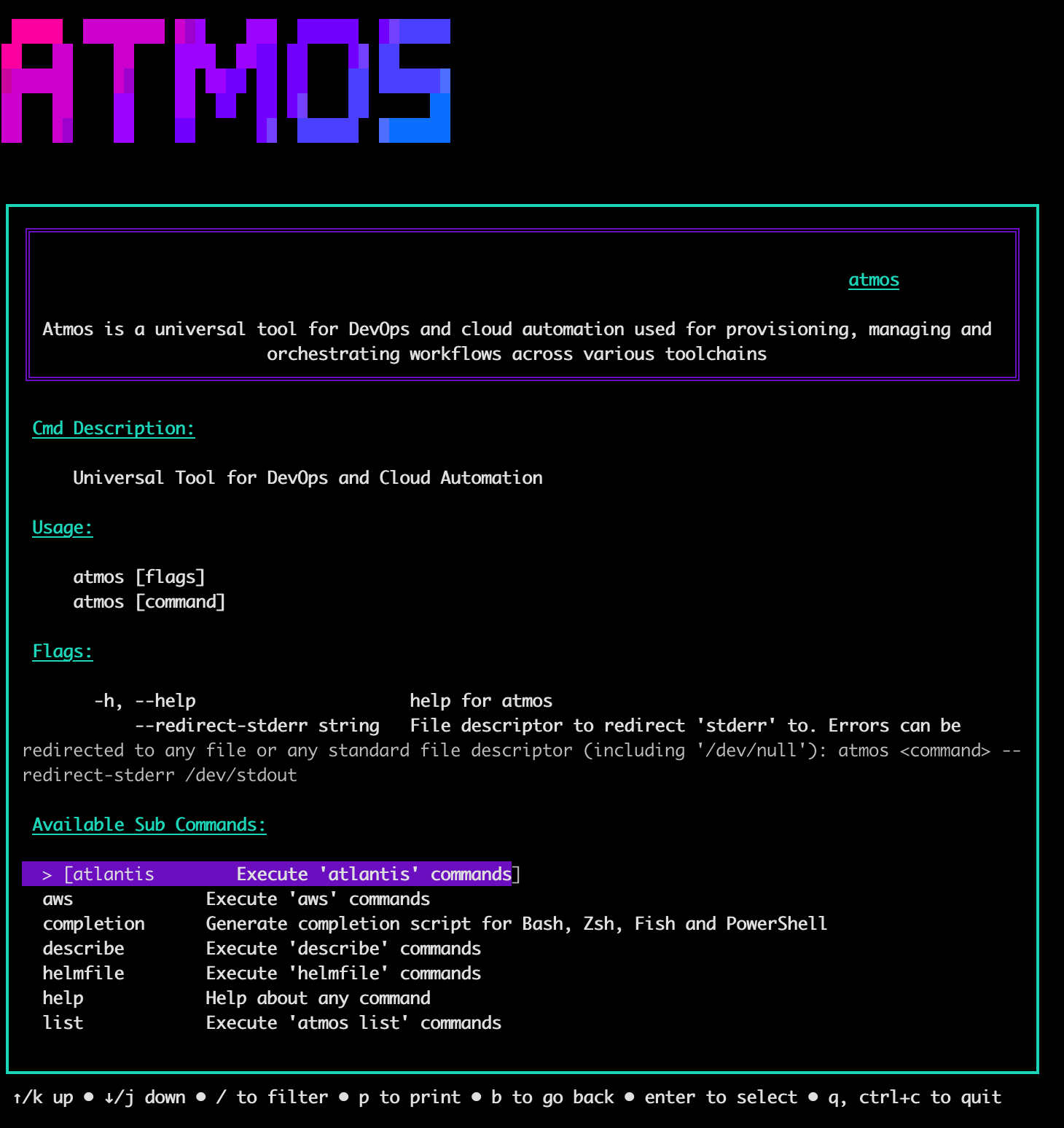

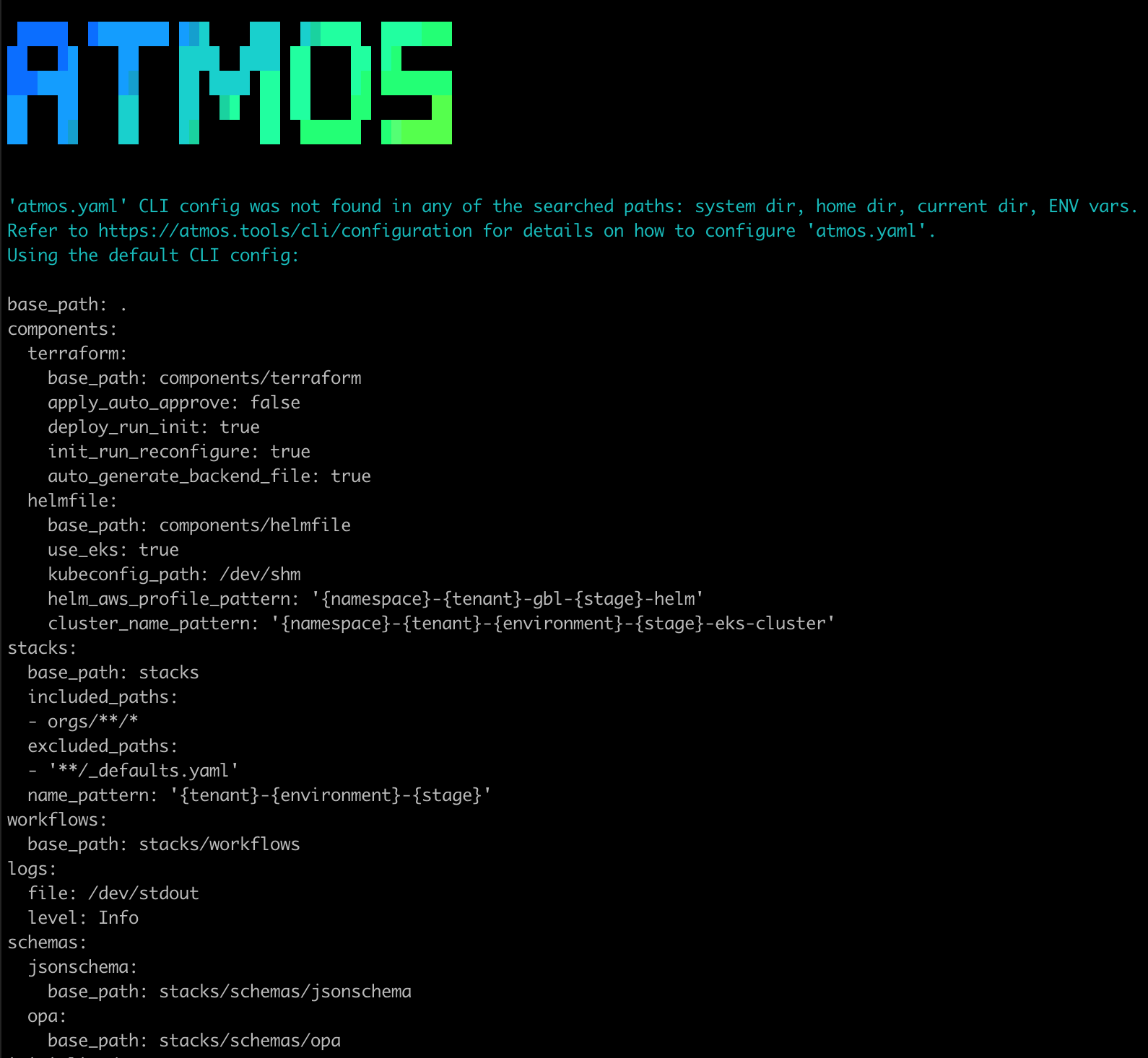

Use this command to start an interactive UI to run Atmos commands against any component or stack. Press `Enter` to execute the command for the selected stack and component.

## Usage

Just run the `atmos` command in your terminal to start the interactive UI. Use the arrow keys to select stacks and components to deploy.

```shell

atmos

```

- Use the `right/left` arrow keys to navigate between the "Commands", "Stacks" and "Components" views

- Use the `up/down` arrow keys (or the mouse wheel) to select a command to execute, component and stack

- Use the `/` key to filter/search for the commands, components, and stacks in the corresponding views

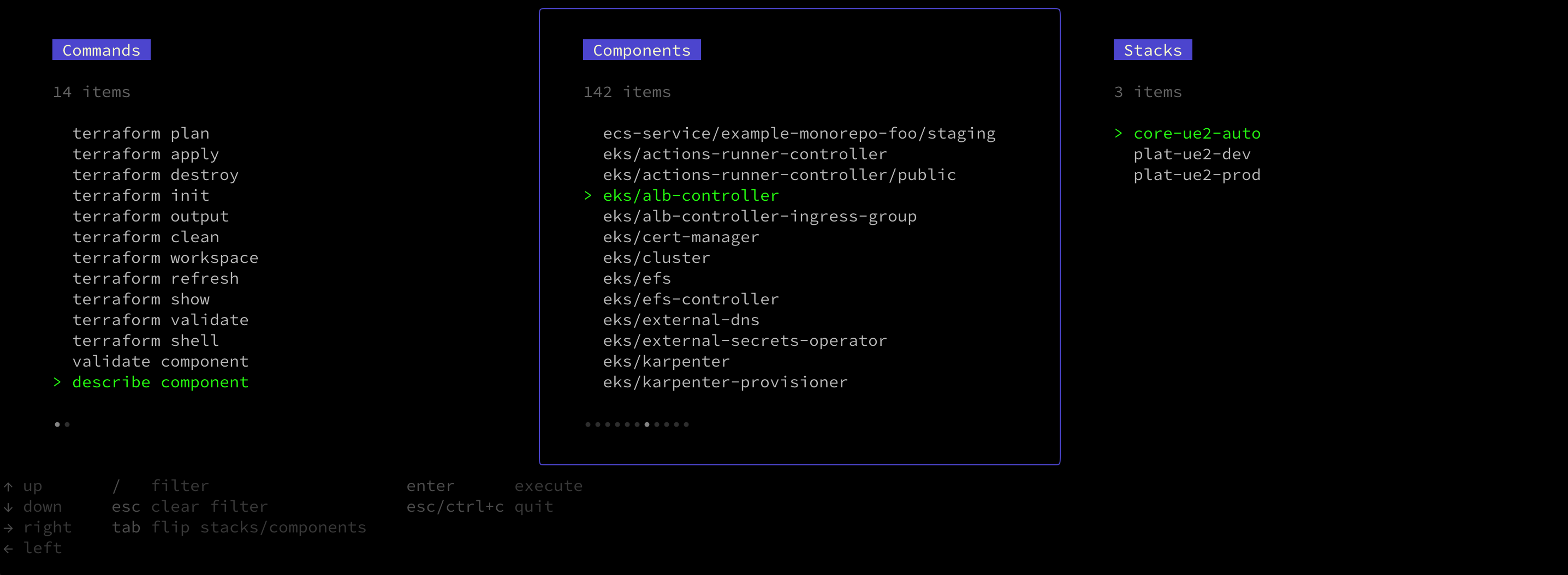

- Use the `Tab` key to flip the "Stacks" and "Components" views. This is useful to be able to use the UI in two different modes:

  * `Mode 1: Components in Stacks`. Display all available stacks, select a stack, then show all the components that are defined in the selected stack

  * `Mode 2: Stacks for Components`. Display all available components, select a component, then show all the stacks where the selected component is configured

- Press `Enter` to execute the selected command for the selected stack and component

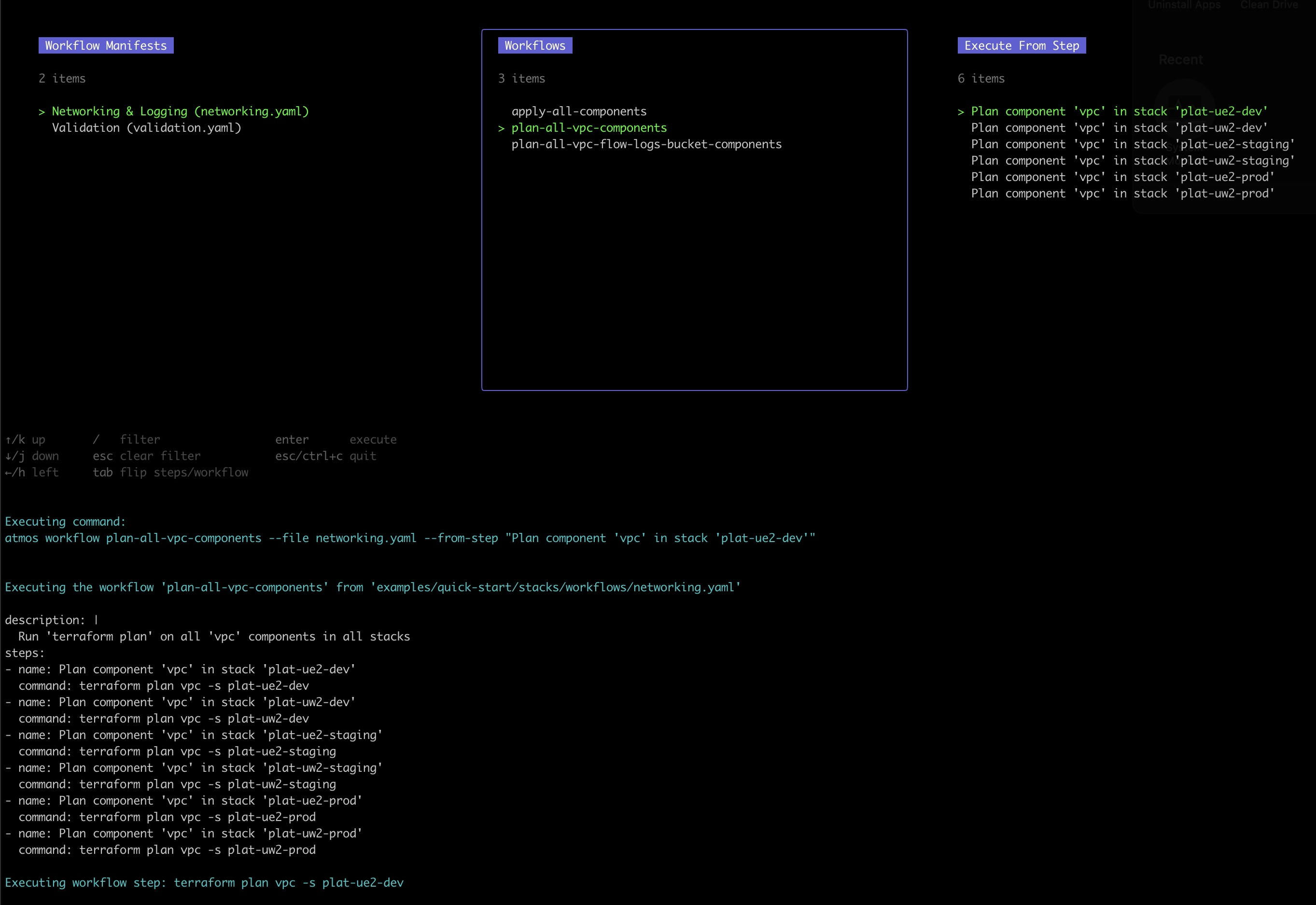

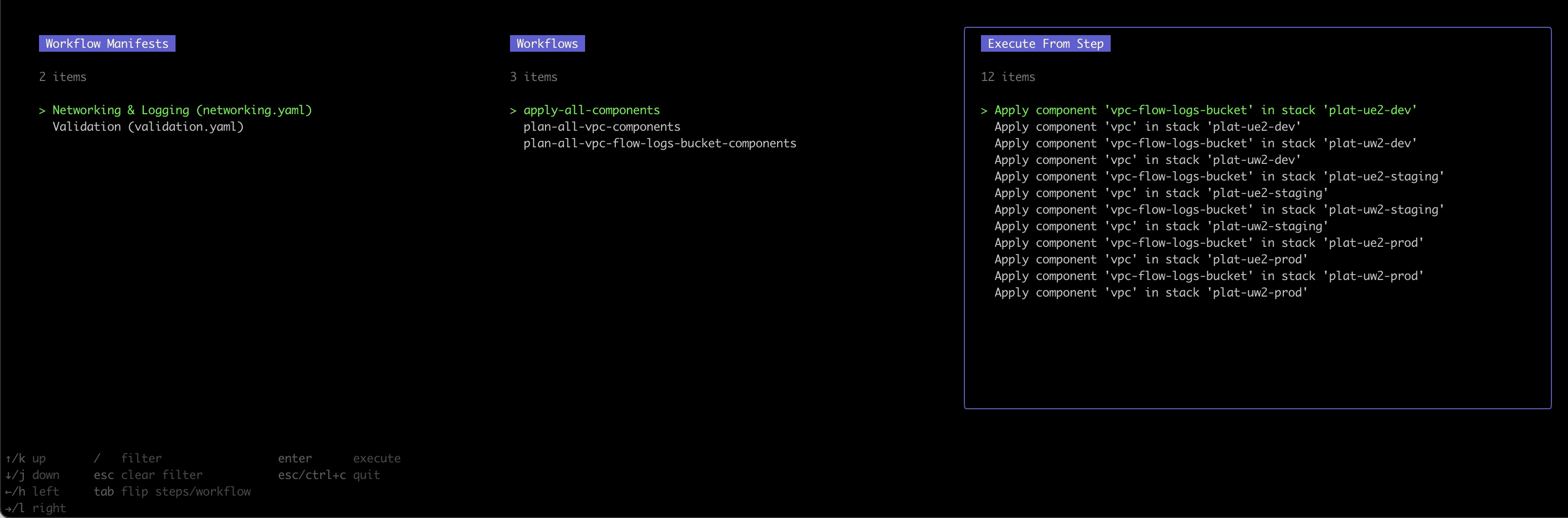

## Screenshots

To get an idea of what it looks like using `atmos` on the command line, just [try our quickstart](/quick-start/) and run the [`atmos`](/cli) command to start an interactive UI in the terminal. Use the arrow keys to select stacks and components to deploy.

### Components in Stacks (Mode 1)

In Atmos, you can easily search and navigate your configuration from the built-in UI.

### Stacks for Components (Mode 2)

You can also search for the stacks where a component is configured.

---

## atmos atlantis generate repo-config

Use this command to generate a repository configuration (`atlantis.yaml`) for Atlantis.

```shell

atmos atlantis generate repo-config [options]

```

:::tip

Run `atmos atlantis generate repo-config --help` to see all the available options

:::

## Examples

```shell

atmos atlantis generate repo-config

atmos atlantis generate repo-config --output-path /dev/stdout

atmos atlantis generate repo-config --config-template config-1 --project-template project-1

atmos atlantis generate repo-config --config-template config-1 --project-template project-1 --stacks

atmos atlantis generate repo-config --config-template config-1 --project-template project-1 --components

atmos atlantis generate repo-config --config-template config-1 --project-template project-1 --stacks --components

atmos atlantis generate repo-config --affected-only=true

atmos atlantis generate repo-config --affected-only=true --output-path /dev/stdout

atmos atlantis generate repo-config --affected-only=true --verbose=true

atmos atlantis generate repo-config --affected-only=true --output-path /dev/stdout --verbose=true

atmos atlantis generate repo-config --affected-only=true --repo-path

atmos atlantis generate repo-config --affected-only=true --ref refs/heads/main

atmos atlantis generate repo-config --affected-only=true --ref refs/tags/v1.1.0

atmos atlantis generate repo-config --affected-only=true --sha 3a5eafeab90426bd82bf5899896b28cc0bab3073

atmos atlantis generate repo-config --affected-only=true --ref refs/tags/v1.2.0 --sha 3a5eafeab90426bd82bf5899896b28cc0bab3073

atmos atlantis generate repo-config --affected-only=true --ssh-key

atmos atlantis generate repo-config --affected-only=true --ssh-key --ssh-key-password

atmos atlantis generate repo-config --affected-only=true --clone-target-ref=true

```

## Flags

- `--config-template` (optional)

- Atlantis config template name.

- `--project-template` (optional)

- Atlantis project template name.

- `--output-path` (optional)

- Output path to write `atlantis.yaml` file.

- `--stacks` (optional)

- Generate Atlantis projects for the specified stacks only (comma-separated values).

- `--components` (optional)

- Generate Atlantis projects for the specified components only (comma-separated values).

- `--affected-only` (optional)

- Generate Atlantis projects only for the Atmos components changed between two Git commits.

- `--ref` (optional)

- [Git Reference](https://git-scm.com/book/en/v2/Git-Internals-Git-References) with which to compare the current working branch.

- `--sha` (optional)

- Git commit SHA with which to compare the current working branch.

- `--ssh-key` (optional)

- Path to PEM-encoded private key to clone private repos using SSH.

- `--ssh-key-password` (optional)

- Encryption password for the PEM-encoded private key if the key contains a password-encrypted PEM block.

- `--repo-path` (optional)

- Path to the already cloned target repository with which to compare the current branch. Conflicts with `--ref`, `--sha`, `--ssh-key` and `--ssh-key-password`.

- `--verbose` (optional)

- Print more detailed output when cloning and checking out the target Git repository and processing the result.

- `--clone-target-ref` (optional)

- Clone the target reference with which to compare the current branch, for example `atmos atlantis generate repo-config --affected-only=true --clone-target-ref=true`. The flag is only used when `--affected-only=true`. If set to `false` (default), the target reference will be checked out instead. This requires that the target reference is already cloned by Git, and the information about it exists in the `.git` directory.

:::info

Refer to [Atlantis Integration](/integrations/atlantis) for more details on the Atlantis integration in Atmos

:::

---

## atmos atlantis

Use these subcommands to execute commands that generate Atlantis configurations.

## Usage

## Subcommands

---

## atmos aws eks update-kubeconfig

Use this command to download `kubeconfig` from an EKS cluster and save it to a file.

```shell

atmos aws eks update-kubeconfig [options]

```

This command executes the `aws eks update-kubeconfig` command to download `kubeconfig` from an EKS cluster and saves it to a file.

The command can execute `aws eks update-kubeconfig` in three different ways:

1. If all the required parameters (cluster name and AWS profile/role) are provided on the command line, Atmos executes the command without requiring the `atmos.yaml` CLI config and context.

For example:

```shell

atmos aws eks update-kubeconfig --profile= --name=

```

2. If `component` and `stack` are provided on the command line, Atmos executes the command using the `atmos.yaml` CLI config and the stack's context by searching for the following settings:

- `components.helmfile.cluster_name_pattern` in the `atmos.yaml` CLI config (and calculates the `--name` parameter using the pattern)

- `components.helmfile.helm_aws_profile_pattern` in the `atmos.yaml` CLI config (and calculates the `--profile` parameter using the pattern)

- `components.helmfile.kubeconfig_path` in the `atmos.yaml` CLI config

- the variables for the component in the provided stack

- `region` from the variables for the component in the stack

For example:

```shell

atmos aws eks update-kubeconfig -s

```

3. A combination of the above: provide a component and a stack, and override other parameters on the command line.

For example:

```shell

atmos aws eks update-kubeconfig -s --kubeconfig= --region=us-east-1

```
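The three invocation styles above can be summarized as a small decision function. This is only an illustrative sketch of the rules as described in this section, not Atmos's actual implementation; the function name `select_mode` and the mode labels are hypothetical.

```python
def select_mode(component=None, stack=None, **flags):
    """Classify an invocation into one of the three styles described above.

    `flags` holds optional command-line values such as name, profile,
    role_arn, kubeconfig or region (hypothetical keyword names).
    """
    given = {key: value for key, value in flags.items() if value}

    # Styles 2 and 3: a component and a stack are provided, so the
    # atmos.yaml CLI config and the stack's context are used.
    if component and stack:
        return "combined" if given else "stack-context"

    # Style 1: cluster name plus an AWS profile or role on the command line.
    if given.get("name") and (given.get("profile") or given.get("role_arn")):
        return "explicit"

    raise ValueError("provide a cluster name and profile/role, or a component and stack")


if __name__ == "__main__":
    print(select_mode(name="my-cluster", profile="my-profile"))
    print(select_mode(component="my-component", stack="my-stack"))
    print(select_mode(component="my-component", stack="my-stack", region="us-east-1"))
```

The cluster, profile, component and stack names in the usage lines are placeholders.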

:::info

Refer to [Update kubeconfig](https://docs.aws.amazon.com/cli/latest/reference/eks/update-kubeconfig.html) for more information

:::

:::tip

Run `atmos aws eks update-kubeconfig --help` to see all the available options

:::

## Examples

```shell

atmos aws eks update-kubeconfig -s

atmos aws eks update-kubeconfig --profile= --name=

atmos aws eks update-kubeconfig -s --kubeconfig= --region=

atmos aws eks update-kubeconfig --role-arn

atmos aws eks update-kubeconfig --alias

atmos aws eks update-kubeconfig --dry-run=true

atmos aws eks update-kubeconfig --verbose=true

```

## Arguments

- `component` (optional)

- Atmos component.

## Flags

- `--stack` / `-s` (optional)

- Atmos stack.

- `--profile` (optional)

- AWS profile to use to authenticate to the EKS cluster.

- `--role-arn` (optional)

- AWS IAM role ARN to use to authenticate to the EKS cluster.

- `--name` (optional)

- EKS cluster name.

- `--region` (optional)

- AWS region.

- `--kubeconfig` (optional)

- `kubeconfig` filename to append with the configuration.

- `--alias` (optional)

- Alias for the cluster context name. Defaults to match cluster ARN.

- `--dry-run` (optional)

- Print the merged kubeconfig to stdout instead of writing it to the specified file.

- `--verbose` (optional)

- Print more detailed output when writing the kubeconfig file, including the appended entries.

---

## atmos aws

## Subcommands

Use these subcommands to interact with AWS.

---

## Atmos CLI Commands

Use these commands to perform operations.

# Commands

---

## atmos completion

Use this command to generate completion scripts for `Bash`, `Zsh`, `Fish` and `PowerShell`.

## Usage

Execute the `completion` command like this:

```shell

atmos completion [bash|zsh|fish|powershell]

```

This command generates completion scripts for `Bash`, `Zsh`, `Fish` and `PowerShell`.

When the generated completion script is loaded into the shell, pressing the tab key twice displays the available commands and the help.

:::tip

Run `atmos completion --help` to see all the available options

:::

## Configuring Your Shell

To enable command completion, you need to configure your shell. The setup process depends on which shell you’re using (e.g., `zsh` or `bash`).

Select your shell below for detailed setup instructions.

## Bash Completion Setup

To enable tab completion for Atmos in Bash, add the following to your `~/.bashrc` or `~/.bash_profile`:

```bash

# Enable Atmos CLI completion

source <(atmos completion bash)

```

After saving the file, apply the changes by running:

```bash

source ~/.bashrc

```

Now, you can run any `atmos` command, and pressing `Tab` after typing `atmos` will show the available subcommands. The same applies to `--stack` arguments and commands requiring a component (e.g., `atmos terraform plan`).

## Zsh Completion Setup

To enable tab completion for Atmos in `Zsh`, add the following to your `~/.zshrc`:

```zsh

# Initialize Zsh completion system

autoload -Uz compinit && compinit

# Enable Atmos CLI completion

source <(atmos completion zsh)

# Improve completion behavior

zstyle ':completion:*' menu select # Enable menu selection

zstyle ':completion:*' force-list always # Force vertical menu listing

# Ensure the Tab key triggers autocompletion

bindkey '\t' expand-or-complete

```

After saving the file, apply the changes by running:

```zsh

source ~/.zshrc

```

Now, you can run any `atmos` command, and pressing `Tab` after typing `atmos` will show the available subcommands. The same applies to `--stack` arguments and commands requiring a component (e.g., `atmos terraform plan`).

If completions do not work, try regenerating the completion cache:

```zsh

rm -f ~/.zcompdump && compinit

```

:::warning

The Atmos completion script statically completes [custom commands](/core-concepts/custom-commands) based on the Atmos configuration. If completions are generated without this configuration (e.g., outside a project directory), custom commands won’t be included. To ensure accuracy, generate or regenerate the script from the correct working directory. This only affects custom commands. Components, stacks, and built-in commands remain fully dynamic.

:::

### Examples

```shell

atmos completion bash

atmos completion zsh

atmos completion fish

atmos completion powershell

```

You can generate and load the shell completion script for `Bash` by executing the following commands:

```shell

atmos completion bash > /tmp/completion

source /tmp/completion

```

or

```shell

source <(atmos completion bash)

```

## Arguments

- `shell_name` (required)

- Shell name. Valid values are `bash`, `zsh`, `fish` and `powershell`.

:::info

Refer to [Command-line completion](https://en.wikipedia.org/wiki/Command-line_completion) for more details

:::

---

## atmos describe affected

Use this command to show a list of the affected Atmos components and stacks given two Git commits.

## Description

The command uses two different Git commits to produce a list of affected Atmos components and stacks.

For the first commit, the command assumes that the current repo root is a Git checkout. An error will be thrown if the current repo is not a Git repository (the `.git/` folder does not exist or is configured incorrectly).

The second commit can be specified on the command line by using the `--ref` ([Git References](https://git-scm.com/book/en/v2/Git-Internals-Git-References)) or `--sha` (commit SHA) flags. The `--sha` flag takes precedence over the `--ref` flag.

:::tip

If the flags are not provided, the `ref` will be set automatically to the reference to the default branch (`refs/remotes/origin/HEAD` Git ref, usually the `main` branch).

:::
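The precedence between `--sha`, `--ref` and the default reference can be sketched as follows. This is a minimal illustration of the rule stated above, not the Atmos source; the function name `resolve_target` and the returned tuple shape are hypothetical.

```python
from typing import Optional, Tuple

# Default Git ref named in this section (usually points at the `main` branch).
DEFAULT_REF = "refs/remotes/origin/HEAD"


def resolve_target(ref: Optional[str] = None, sha: Optional[str] = None) -> Tuple[str, str]:
    """Return (kind, value) for the second commit used in the comparison."""
    if sha:
        # `--sha` takes precedence over `--ref` when both are given.
        return ("sha", sha)
    if ref:
        return ("ref", ref)
    # Neither flag was provided: fall back to the default branch reference.
    return ("ref", DEFAULT_REF)


if __name__ == "__main__":
    print(resolve_target())
    print(resolve_target(ref="refs/heads/main"))
    print(resolve_target(ref="refs/tags/v1.16.0",
                         sha="3a5eafeab90426bd82bf5899896b28cc0bab3073"))
```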

## How does it work?

The command performs the following:

- If the `--repo-path` flag is passed, the command uses it as the path to the already cloned target repo with which to compare the current working branch. In this case, the command does not clone and check out the target reference, but instead uses the already cloned one for the comparison. The `--ref`, `--sha`, `--ssh-key` and `--ssh-key-password` flags are not used, and an error is thrown if `--repo-path` is provided together with any of them.

- Otherwise, if the `--clone-target-ref=true` flag is specified, the command clones (into a temp directory) the remote target with which to compare the current working branch. If the `--ref` flag or the commit SHA flag `--sha` is provided, the command uses it to clone and check out the remote target. Otherwise, the `HEAD` of the remote origin is used (`refs/remotes/origin/HEAD` Git ref, usually the `main` branch).

- Otherwise (if neither `--repo-path` nor `--clone-target-ref=true` is passed), the command does not clone anything from the remote origin. Instead, it copies the current repo into a temp directory and checks out the target reference with which to compare the current working branch. If the `--ref` flag or the commit SHA flag `--sha` is provided, the command uses it for the checkout. Otherwise, the `HEAD` of the remote origin is used (`refs/remotes/origin/HEAD` Git ref, usually the `main` branch). This requires that the target reference is already cloned by Git, and that the information about it exists in the `.git` directory (when using a non-default branch as the target, a Git deep clone needs to be executed instead of a shallow clone). This is the recommended way to execute the `atmos describe affected` command since it allows [working with private repositories](#working-with-private-repositories) without providing the SSH credentials (`--ssh-key` and `--ssh-key-password` flags): Atmos does not access the remote origin and instead just checks out the target reference, which is already on the local file system.

- The command deep-merges all stack configurations from both sources: the current working branch and the target reference

- The command searches for changes in the component directories

- The command compares each stack manifest section of the stack configurations from both sources looking for differences

- Finally, the command outputs a JSON or YAML document listing the affected components and stacks and what caused each one to be affected

Since Atmos first checks the component folders for changes, if it finds any affected files, it will mark all related components and stacks as affected. Atmos will then skip evaluating the stacks for differences since it already knows that they are affected.
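The choice between the three ways of obtaining the target reference can be sketched as a small function. This is a hedged illustration of the flag rules described in the list above, not the Atmos implementation; `choose_strategy`, `FlagConflictError` and the strategy labels are hypothetical names.

```python
class FlagConflictError(ValueError):
    """Raised when `--repo-path` is combined with a conflicting flag."""


def choose_strategy(repo_path=None, clone_target_ref=False,
                    ref=None, sha=None, ssh_key=None, ssh_key_password=None):
    """Pick how the target reference is obtained, following the order above."""
    if repo_path:
        # `--repo-path` conflicts with the flags used for cloning/checkout.
        candidates = (("--ref", ref), ("--sha", sha),
                      ("--ssh-key", ssh_key),
                      ("--ssh-key-password", ssh_key_password))
        conflicting = [name for name, value in candidates if value]
        if conflicting:
            raise FlagConflictError(
                "--repo-path cannot be combined with " + ", ".join(conflicting))
        return "use-existing-clone"
    if clone_target_ref:
        # Clone the remote target into a temp directory.
        return "clone-remote"
    # Default: copy the current repo to a temp directory and check out the target.
    return "checkout-local-copy"


if __name__ == "__main__":
    print(choose_strategy())
    print(choose_strategy(clone_target_ref=True, ref="refs/heads/main"))
    print(choose_strategy(repo_path="/tmp/target-repo"))
```

The path `/tmp/target-repo` is a placeholder.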

:::tip Use our GitHub Action

Our [affected stacks](/integrations/github-actions/affected-stacks) GitHub Action provides a ready-to-go way to run

`describe affected` and produce a GitHub matrix.

:::

## Usage

```shell

atmos describe affected [options]

```

:::tip

Run `atmos describe affected --help` to see all the available options

:::

## Examples

```shell

atmos describe affected

atmos describe affected --verbose=true

atmos describe affected --ref refs/heads/main

atmos describe affected --ref refs/heads/my-new-branch --verbose=true

atmos describe affected --ref refs/heads/main --format json

atmos describe affected --ref refs/tags/v1.16.0 --file affected.yaml --format yaml

atmos describe affected --sha 3a5eafeab90426bd82bf5899896b28cc0bab3073 --file affected.json

atmos describe affected --sha 3a5eafeab90426bd82bf5899896b28cc0bab3073

atmos describe affected --ssh-key

atmos describe affected --ssh-key --ssh-key-password

atmos describe affected --repo-path

atmos describe affected --include-spacelift-admin-stacks=true

atmos describe affected --clone-target-ref=true

atmos describe affected --include-dependents=true

atmos describe affected --include-settings=true

atmos describe affected --stack=plat-ue2-prod

atmos describe affected --upload=true

atmos describe affected --query

atmos describe affected --process-templates=false

atmos describe affected --process-functions=false

atmos describe affected --skip=terraform.output

atmos describe affected --skip=terraform.output --skip=include

atmos describe affected --skip=include,eval

atmos describe affected --exclude-locked

```

## Example Output

```shell

> atmos describe affected --verbose=true

Cloning repo 'https://github.com/cloudposse/atmos' into the temp dir '/var/folders/g5/lbvzy_ld2hx4mgrgyp19bvb00000gn/T/16710736261366892599'

Checking out the HEAD of the default branch ...

Enumerating objects: 4215, done.

Counting objects: 100% (1157/1157), done.

Compressing objects: 100% (576/576), done.

Total 4215 (delta 658), reused 911 (delta 511), pack-reused 3058

Checked out Git ref 'refs/heads/main'

Current HEAD: 7d37c1e890514479fae404d13841a2754be70cbf refs/heads/describe-affected

BASE: 40210e8d365d3d88ac13c0778c0867b679bbba69 refs/heads/main

Changed files:

tests/fixtures/scenarios/complete/components/terraform/infra/vpc/main.tf

internal/exec/describe_affected.go

website/docs/cli/commands/describe/describe-affected.md

Affected components and stacks:

[

{

"component": "infra/vpc",

"component_type": "terraform",

"component_path": "components/terraform/infra/vpc",

"stack": "tenant1-ue2-dev",

"stack_slug": "tenant1-ue2-dev-infra-vpc",

"spacelift_stack": "tenant1-ue2-dev-infra-vpc",

"atlantis_project": "tenant1-ue2-dev-infra-vpc",

"affected": "component"

},

{

"component": "infra/vpc",

"component_type": "terraform",

"component_path": "components/terraform/infra/vpc",

"stack": "tenant1-ue2-prod",

"stack_slug": "tenant1-ue2-prod-infra-vpc",

"spacelift_stack": "tenant1-ue2-prod-infra-vpc",

"atlantis_project": "tenant1-ue2-prod-infra-vpc",

"affected": "component"

},

{

"component": "infra/vpc",

"component_type": "terraform",

"component_path": "components/terraform/infra/vpc",

"stack": "tenant1-ue2-staging",

"stack_slug": "tenant1-ue2-staging-infra-vpc",

"spacelift_stack": "tenant1-ue2-staging-infra-vpc",

"atlantis_project": "tenant1-ue2-staging-infra-vpc",

"affected": "component"

},

{

"component": "top-level-component3",

"component_type": "terraform",

"component_path": "components/terraform/top-level-component1",

"stack": "tenant1-ue2-test-1",

"stack_slug": "tenant1-ue2-test-1-top-level-component3",

"atlantis_project": "tenant1-ue2-test-1-top-level-component3",

"affected": "file",

"file": "tests/fixtures/scenarios/complete/components/terraform/mixins/introspection.mixin.tf"

},

{

"component": "top-level-component3",

"component_type": "terraform",

"component_path": "components/terraform/top-level-component1",

"stack": "tenant1-ue2-test-1",

"stack_slug": "tenant1-ue2-test-1-top-level-component3",

"atlantis_project": "tenant1-ue2-test-1-top-level-component3",

"affected": "folder",

"folder": "tests/fixtures/scenarios/complete/components/helmfile/infra/infra-server"

}

]

```
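Downstream tooling often needs one entry per component and stack pair, while the output above can list the same pair more than once (for example, once for a `file` and once for a `folder` dependency). The sketch below de-duplicates such a list. It assumes the JSON document was saved separately (for instance with `--file affected.json`), because the verbose output mixes log lines with the JSON. The helper name `to_matrix` is hypothetical, and the sample is a trimmed copy of the output above.

```python
import json

# Trimmed copy of the example output above; only the fields used here are kept.
SAMPLE = """
[
  {"component": "infra/vpc", "stack": "tenant1-ue2-dev", "affected": "component"},
  {"component": "infra/vpc", "stack": "tenant1-ue2-prod", "affected": "component"},
  {"component": "top-level-component3", "stack": "tenant1-ue2-test-1", "affected": "file"},
  {"component": "top-level-component3", "stack": "tenant1-ue2-test-1", "affected": "folder"}
]
"""


def to_matrix(affected_json):
    """Return unique {stack, component} pairs in their original order."""
    seen = set()
    matrix = []
    for item in json.loads(affected_json):
        key = (item["stack"], item["component"])
        if key not in seen:
            seen.add(key)
            matrix.append({"stack": key[0], "component": key[1]})
    return matrix


if __name__ == "__main__":
    for entry in to_matrix(SAMPLE):
        print(entry["stack"], entry["component"])
```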

## Flags

- `--ref` (optional)

- [Git Reference](https://git-scm.com/book/en/v2/Git-Internals-Git-References) with which to compare the current working branch.

- `--sha` (optional)

- Git commit SHA with which to compare the current working branch.

- `--file` (optional)

- If specified, write the result to the file.

- `--format` (optional)

- Specify the output format: `json` or `yaml` (`json` is default).

- `--ssh-key` (optional)

- Path to PEM-encoded private key to clone private repos using SSH.

- `--ssh-key-password` (optional)

- Encryption password for the PEM-encoded private key if the key contains a password-encrypted PEM block.

- `--repo-path` (optional)

- Path to the already cloned target repository with which to compare the current branch. Conflicts with `--ref`, `--sha`, `--ssh-key` and `--ssh-key-password`.

- `--verbose` (optional)

- Print more detailed output when cloning and checking out the target Git repository and processing the result.

- `--include-spacelift-admin-stacks` (optional)

- Include the Spacelift admin stack of any stack that is affected by config changes.

- `--clone-target-ref` (optional)

- Clone the target reference with which to compare the current branch, for example `atmos describe affected --clone-target-ref=true`. If set to `false` (default), the target reference will be checked out instead. This requires that the target reference is already cloned by Git, and the information about it exists in the `.git` directory.

- `--stack` (optional)

- Only show results for the specific stack, for example `atmos describe affected --stack=plat-ue2-prod`.

- `--include-dependents` (optional)

- Include the dependent components and stacks, for example `atmos describe affected --include-dependents=true`.

- `--include-settings` (optional)

- Include the `settings` section for each affected component, for example `atmos describe affected --include-settings=true`.

- `--query` (optional)

- Query the results of the command using YQ expressions: `atmos describe affected --query=`. For more details, refer to [YQ - a lightweight and portable command-line YAML processor](https://mikefarah.gitbook.io/yq).

- `--process-templates` (optional)

- Enable/disable processing of `Go` templates in Atmos stacks manifests when executing the command. If the flag is not provided, it's set to `true` by default. For example: `atmos describe affected --process-templates=false`.

- `--process-functions` (optional)

- Enable/disable processing of Atmos YAML functions in Atmos stacks manifests when executing the command. If the flag is not provided, it's set to `true` by default. For example: `atmos describe affected --process-functions=false`.

- `--skip` (optional)

- Skip processing a specific Atmos YAML function in Atmos stacks manifests when executing the command. To specify more than one function, use multiple `--skip` flags (`atmos describe affected --skip=terraform.output --skip=include`), or separate the functions with a comma (`atmos describe affected --skip=terraform.output,include`).

- `--exclude-locked` (optional)

- Exclude the locked components (`metadata.locked: true`) from the output, for example `atmos describe affected --exclude-locked`. Refer to [Locking Components with `metadata.locked`](/core-concepts/stacks/define-components/#locking-components-with-metadatalocked).

- `--upload` (optional)

- Upload the affected components and stacks to a specified HTTP endpoint, for example `atmos describe affected --upload=true`. Atmos will perform an HTTP POST request to the URL `${ATMOS_PRO_BASE_URL}/${ATMOS_PRO_ENDPOINT}`, where the base URL is defined by the `ATMOS_PRO_BASE_URL` environment variable, and the URL path is defined by the `ATMOS_PRO_ENDPOINT` environment variable.

## Output

The command outputs a list of objects (in JSON or YAML format).

Each object has the following schema:

```json

{

"component": "....",

"component_type": "....",

"component_path": "....",

"stack": "....",

"stack_slug": "....",

"spacelift_stack": ".....",

"atlantis_project": ".....",

"affected": ".....",

"affected_all": [],

"file": ".....",

"folder": ".....",

"dependents": [],

"included_in_dependents": "true | false",

"settings": {}

}

```

where:

- `component`

- The affected Atmos component.

- `component_type`

- The type of the component (`terraform` or `helmfile`).

- `component_path`

- The filesystem path to the `terraform` or `helmfile` component.

- `stack`

- The affected Atmos stack.

- `stack_slug`

- The Atmos stack slug (concatenation of the Atmos stack and Atmos component).

- `spacelift_stack`

- The affected Spacelift stack. It will be included only if the Spacelift workspace is enabled for the Atmos component in the Atmos stack in the `settings.spacelift.workspace_enabled` section (either directly in the component's `settings.spacelift.workspace_enabled` section or via inheritance).

- `atlantis_project`

- The affected Atlantis project name. It will be included only if the Atlantis integration is configured in the `settings.atlantis` section in the stack config. Refer to [Atlantis Integration](/integrations/atlantis) for more details.

- `file`

- If the Atmos component depends on an external file, and the file was changed, the `file` attribute shows the modified file.

- `folder`

- If the Atmos component depends on an external folder, and any file in the folder was changed, the `folder` attribute shows the modified folder.

- `dependents`

- A list of components that depend on the current affected component. It will be populated only if the command-line flag `--include-dependents=true` is passed (to take dependencies into account) and there are other components that depend on the affected component in the stack. Refer to [`atmos describe dependents`](/cli/commands/describe/dependents) for more details. The `dependents` property is hierarchical: each component in the list will also contain a `dependents` property if that component has dependent components as well.

- `settings`

- The `settings` section of the component in the stack. It will be included only if the command-line flag `--include-settings=true` is passed. The `settings` section is a free-form map used to pass configuration information to [integrations](/integrations).

- `included_in_dependents`

- A boolean flag indicating whether the affected component in the stack is also present in any of the `dependents` lists in the dependency hierarchy of the other affected components. It will be included only if the command-line flag `--include-dependents=true` is passed. This flag can be used to decide whether to plan/apply the affected component: you might skip planning/applying the component since it's also a dependency of another affected component and will be triggered in the dependency order of the other affected component.

- `affected`

- Shows the first (in the processing order) section that was changed. The possible values are:

- `stack.vars`

- The `vars` component section in the stack config has been modified.

- `stack.env`

- The `env` component section in the stack config has been modified.

- `stack.settings`

- The `settings` component section in the stack config has been modified.

- `stack.metadata`

- The `metadata` component section in the stack config has been modified.

- `component`

- The Terraform or Helmfile component that the Atmos component provisions has been changed.

- `component.module`

- The Terraform component is affected because it uses a local Terraform module (not from the Terraform registry, but from the local filesystem), and that local module has been changed.

For example, let's suppose that we have a catalog of reusable Terraform modules in the `modules` folder (outside the `components` folder), and

we have defined the following `label` Terraform module in `modules/label`:

```hcl title="modules/label"

module "label" {

source = "cloudposse/label/null"

version = "0.25.0"

context = module.this.context

}

output "label" {

value = module.label

description = "Label outputs"

}

```

We then use the Terraform module in the `components/terraform/top-level-component1` component:

```hcl title="components/terraform/top-level-component1"

module "service_2_label" {

source = "../../../modules/label"

context = module.this.context

}

output "service_2_id" {

value = module.service_2_label.label.id

description = "Service 2 ID"

}

```

The `label` module is not in the stack config of the `top-level-component1` component (not in the YAML stack config files), but Atmos

understands Terraform dependencies (using a Terraform parser from HashiCorp), and can automatically detect any changes to the module.

For example, if you make changes to any files in the folder `modules/label`, Atmos will detect the module changes, and since the module is a

Terraform dependency of the `top-level-component1` component, Atmos will mark the component as affected with the `affected` attribute

set to `component.module`:

```json

[

{

"component": "top-level-component1",

"component_type": "terraform",

"component_path": "tests/fixtures/scenarios/complete/components/terraform/top-level-component1",

"stack": "tenant1-ue2-staging",

"stack_slug": "tenant1-ue2-staging-top-level-component1",

"spacelift_stack": "tenant1-ue2-staging-top-level-component1",

"atlantis_project": "tenant1-ue2-staging-top-level-component1",

"affected": "component.module",

"affected_all": [

"component.module"

]

},

{

"component": "top-level-component1",

"component_type": "terraform",

"component_path": "tests/fixtures/scenarios/complete/components/terraform/top-level-component1",

"stack": "tenant2-ue2-staging",

"stack_slug": "tenant2-ue2-staging-top-level-component1",

"spacelift_stack": "tenant2-ue2-staging-top-level-component1",

"atlantis_project": "tenant2-ue2-staging-top-level-component1",

"affected": "component.module",

"affected_all": [

"component.module"

]

}

]

```

- `stack.settings.spacelift.admin_stack_selector`

- The Atmos component for the Spacelift admin stack.

This will be included only if all of the following are true:

- The `atmos describe affected` command is executed with the `--include-spacelift-admin-stacks=true` flag

- Any of the affected Atmos components has configured the section `settings.spacelift.admin_stack_selector` pointing to the Spacelift admin stack that manages the components.

For example:

```yaml title="stacks/orgs/cp/tenant1/_defaults.yaml"

settings:

spacelift:

# All Spacelift child stacks for the `tenant1` tenant are managed by the

# `tenant1-ue2-prod-infrastructure-tenant1` Spacelift admin stack.

# The `admin_stack_selector` attribute is used to find the affected Spacelift

# admin stack for each affected Atmos stack

# when executing the command

# `atmos describe affected --include-spacelift-admin-stacks=true`

admin_stack_selector:

component: infrastructure-tenant1

tenant: tenant1

environment: ue2

stage: prod

```

- The Spacelift admin stack is enabled by `settings.spacelift.workspace_enabled` set to `true`.

For example:

```yaml title="stacks/catalog/terraform/spacelift/infrastructure-tenant1.yaml"

components:

terraform:

infrastructure-tenant1:

metadata:

component: spacelift

inherits:

- spacelift-defaults

settings:

spacelift:

workspace_enabled: true

```

- `file`

- An external file on the local filesystem that the Atmos component depends on was changed.

Dependencies on external files (not in the component's folder) are defined using the `file` attribute in the `settings.depends_on` map.

For example:

```yaml title="stacks/catalog/terraform/top-level-component3.yaml"

components:

terraform:

top-level-component3:

metadata:

component: "top-level-component1"

settings:

depends_on:

1:

file: "tests/fixtures/scenarios/complete/components/terraform/mixins/introspection.mixin.tf"

```

In the configuration above, we specify that the Atmos component `top-level-component3` depends on the file

`tests/fixtures/scenarios/complete/components/terraform/mixins/introspection.mixin.tf` (which is not in the component's folder). If the file gets modified,

the component `top-level-component3` will be included in the `atmos describe affected` command output.

For example:

```json

[

{

"component": "top-level-component3",

"component_type": "terraform",

"component_path": "components/terraform/top-level-component1",

"stack": "tenant1-ue2-test-1",

"stack_slug": "tenant1-ue2-test-1-top-level-component3",

"atlantis_project": "tenant1-ue2-test-1-top-level-component3",

"affected": "file",

"affected_all": [

"file"

],

"file": "tests/fixtures/scenarios/complete/components/terraform/mixins/introspection.mixin.tf"

}

]

```

- `folder`

- Any file in an external folder that the Atmos component depends on was changed.

Dependencies on external folders are defined using the `folder` attribute in the `settings.depends_on` map.

For example:

```yaml title="stacks/catalog/terraform/top-level-component3.yaml"
components:
  terraform:
    top-level-component3:
      metadata:
        component: "top-level-component1"
      settings:
        depends_on:
          1:
            file: "tests/fixtures/scenarios/complete/components/terraform/mixins/introspection.mixin.tf"
          2:
            folder: "tests/fixtures/scenarios/complete/components/helmfile/infra/infra-server"
```

In the configuration above, we specify that the Atmos component `top-level-component3` depends on the folder `tests/fixtures/scenarios/complete/components/helmfile/infra/infra-server`. If any file in the folder gets modified, the component `top-level-component3` will be included in the `atmos describe affected` command output.

For example:

```json
[
  {
    "component": "top-level-component3",
    "component_type": "terraform",
    "component_path": "components/terraform/top-level-component1",
    "stack": "tenant1-ue2-test-1",
    "stack_slug": "tenant1-ue2-test-1-top-level-component3",
    "atlantis_project": "tenant1-ue2-test-1-top-level-component3",
    "affected": "folder",
    "affected_all": [
      "folder"
    ],
    "folder": "tests/fixtures/scenarios/complete/components/helmfile/infra/infra-server"
  }
]
```
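As a rough illustration of how a script might consume the `file` and `folder` reasons shown above, the following Python sketch pulls out the external paths that caused a component to be reported. The sample data is hand-written to mirror the examples above (trimmed to the fields the sketch reads), and the helper name `external_triggers` is hypothetical, not part of Atmos.

```python
import json

# Hand-written sample mirroring the `file` and `folder` examples above.
# In practice this text would be the JSON produced by `atmos describe affected`.
SAMPLE_OUTPUT = """
[
  {
    "component": "top-level-component3",
    "stack": "tenant1-ue2-test-1",
    "stack_slug": "tenant1-ue2-test-1-top-level-component3",
    "affected": "file",
    "affected_all": ["file"],
    "file": "tests/fixtures/scenarios/complete/components/terraform/mixins/introspection.mixin.tf"
  },
  {
    "component": "top-level-component3",
    "stack": "tenant1-ue2-test-1",
    "stack_slug": "tenant1-ue2-test-1-top-level-component3",
    "affected": "folder",
    "affected_all": ["folder"],
    "folder": "tests/fixtures/scenarios/complete/components/helmfile/infra/infra-server"
  },
  {
    "component": "component-1",
    "stack": "nonprod",
    "stack_slug": "nonprod-component-1",
    "affected": "stack.vars",
    "affected_all": ["stack.vars", "stack.settings"]
  }
]
"""


def external_triggers(affected):
    """Return (stack_slug, kind, path) for entries affected by an external file or folder."""
    triggers = []
    for entry in affected:
        kind = entry.get("affected")
        # Only `file` and `folder` reasons carry a path under a key of the same name.
        if kind in ("file", "folder") and kind in entry:
            triggers.append((entry["stack_slug"], kind, entry[kind]))
    return triggers


for slug, kind, path in external_triggers(json.loads(SAMPLE_OUTPUT)):
    print(f"{slug}: {kind} dependency changed ({path})")
```

Entries whose `affected` value is something else (for example `stack.vars`) are skipped, since they do not carry a `file` or `folder` path.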

- `affected_all`: Shows all component sections and attributes that were changed.

For example, if you make changes to the `vars` and `settings` sections of the component `component-1` in the `nonprod` stack, and execute `atmos describe affected`, you will get the following result:

```json
[
  {
    "component": "component-1",
    "component_type": "terraform",
    "stack": "nonprod",
    "stack_slug": "nonprod-component-1",
    "affected": "stack.vars",
    "affected_all": [
      "stack.vars",
      "stack.settings"
    ]
  }
]
```

If you create a new Terraform/Tofu component, configure a new Atmos component `component-1` in the `nonprod` stack, and execute `atmos describe affected`, you will get the following result:

```json
[
  {
    "component": "component-1",
    "component_type": "terraform",
    "stack": "nonprod",
    "stack_slug": "nonprod-component-1",
    "affected": "stack.metadata",
    "affected_all": [
      "component",
      "stack.metadata",
      "stack.vars",
      "stack.env",
      "stack.settings"
    ]
  }
]
```

where:

- `affected`: Shows that the Atmos component's `metadata` section was changed (since the component is new and the `metadata` section is the first section that Atmos processes).

- `affected_all`: Shows all the affected sections and attributes:

  - `component`: The Terraform component (Terraform configuration) was affected (since it was just added).

  - `stack.metadata`: The Atmos component's `metadata` section was changed.

  - `stack.vars`: The Atmos component's `vars` section was changed.

  - `stack.env`: The Atmos component's `env` section was changed.

  - `stack.settings`: The Atmos component's `settings` section was changed.
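To make the relationship between `affected` and `affected_all` concrete, here is a minimal Python sketch that separates a change to the component's code from changes to stack sections. The entry is copied from the new-component example above; the function name `summarize_reasons` and the shape of its return value are assumptions made for illustration.

```python
def summarize_reasons(entry):
    """Split `affected_all` into a component-code flag and the changed stack sections."""
    reasons = entry.get("affected_all", [])
    prefix = "stack."
    return {
        # The first reason reported by Atmos for this entry.
        "primary": entry.get("affected"),
        # True when the Terraform configuration itself was affected.
        "component_changed": "component" in reasons,
        # Section names with the `stack.` prefix removed, in reported order.
        "stack_sections": [r[len(prefix):] for r in reasons if r.startswith(prefix)],
    }


# Entry taken from the new-component example above.
new_component = {
    "component": "component-1",
    "component_type": "terraform",
    "stack": "nonprod",
    "stack_slug": "nonprod-component-1",
    "affected": "stack.metadata",
    "affected_all": [
        "component",
        "stack.metadata",
        "stack.vars",
        "stack.env",
        "stack.settings",
    ],
}

print(summarize_reasons(new_component))
```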

:::note

[Abstract Atmos components](/design-patterns/abstract-component) (`metadata.type` is set to `abstract`) are not included in the output since they serve as blueprints for other Atmos components and are not meant to be provisioned.

[Disabled Atmos components](/core-concepts/stacks/define-components/#disabling-components-with-metadataenabled) (`metadata.enabled` is set to `false`) are also not included in the output since they are explicitly disabled.

:::

## Output Example

```json
[
  {
    "component": "infrastructure-tenant1",
    "component_type": "terraform",
    "component_path": "tests/fixtures/scenarios/complete/components/terraform/spacelift",
    "stack": "tenant1-ue2-prod",
    "stack_slug": "tenant1-ue2-prod-infrastructure-tenant1",
    "spacelift_stack": "tenant1-ue2-prod-infrastructure-tenant1",
    "atlantis_project": "tenant1-ue2-prod-infrastructure-tenant1",
    "affected": "stack.settings.spacelift.admin_stack_selector",
    "affected_all": [
      "stack.settings.spacelift.admin_stack_selector"
    ]
  },
  {
    "component": "infrastructure-tenant2",
    "component_type": "terraform",
    "component_path": "tests/fixtures/scenarios/complete/components/terraform/spacelift",
    "stack": "tenant2-ue2-prod",
    "stack_slug": "tenant2-ue2-prod-infrastructure-tenant2",
    "spacelift_stack": "tenant2-ue2-prod-infrastructure-tenant2",
    "atlantis_project": "tenant2-ue2-prod-infrastructure-tenant2",
    "affected": "stack.settings.spacelift.admin_stack_selector",
    "affected_all": [
      "stack.settings.spacelift.admin_stack_selector"
    ]
  },
  {
    "component": "test/test-component-override-2",
    "component_type": "terraform",
    "component_path": "components/terraform/test/test-component",
    "stack": "tenant1-ue2-dev",
    "stack_slug": "tenant1-ue2-dev-test-test-component-override-2",
    "spacelift_stack": "tenant1-ue2-dev-new-component",
    "atlantis_project": "tenant1-ue2-dev-new-component",
    "affected": "stack.vars",
    "affected_all": [
      "stack.vars"
    ]
  },
  {
    "component": "infra/vpc",
    "component_type": "terraform",
    "component_path": "components/terraform/infra/vpc",
    "stack": "tenant2-ue2-staging",
    "stack_slug": "tenant1-ue2-staging-infra-vpc",
    "spacelift_stack": "tenant1-ue2-staging-infra-vpc",
    "atlantis_project": "tenant1-ue2-staging-infra-vpc",
    "affected": "component",
    "affected_all": [
      "component"
    ]
  },
  {
    "component": "test/test-component-override-3",
    "component_type": "terraform",
    "component_path": "components/terraform/test/test-component",
    "stack": "tenant1-ue2-prod",
    "stack_slug": "tenant1-ue2-prod-test-test-component-override-3",
    "atlantis_project": "tenant1-ue2-prod-test-test-component-override-3",
    "affected": "stack.env",
    "affected_all": [
      "stack.env"
    ]
  },
  {
    "component": "top-level-component3",
    "component_type": "terraform",
    "component_path": "components/terraform/top-level-component1",
    "stack": "tenant1-ue2-test-1",
    "stack_slug": "tenant1-ue2-test-1-top-level-component3",
    "atlantis_project": "tenant1-ue2-test-1-top-level-component3",
    "affected": "file",
    "affected_all": [
      "file",
      "folder"
    ],
    "file": "tests/fixtures/scenarios/complete/components/terraform/mixins/introspection.mixin.tf"
  },
  {
    "component": "top-level-component3",
    "component_type": "terraform",
    "component_path": "components/terraform/top-level-component1",
    "stack": "tenant1-ue2-test-1",
    "stack_slug": "tenant1-ue2-test-1-top-level-component3",
    "atlantis_project": "tenant1-ue2-test-1-top-level-component3",
    "affected": "folder",
    "affected_all": [
      "file",
      "folder"
    ],
    "folder": "tests/fixtures/scenarios/complete/components/helmfile/infra/infra-server"
  }
]
```
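In the example output, `top-level-component3` appears twice, once for the `file` reason and once for the `folder` reason. If a downstream script should act on each component only once, one possible approach is to merge entries by `stack_slug`. The sketch below assumes the list has already been parsed from JSON; the sample is a hand-trimmed subset of the output above, and `merge_by_slug` is a hypothetical helper.

```python
def merge_by_slug(affected):
    """Map each stack_slug to the de-duplicated, ordered list of reasons reported for it."""
    merged = {}
    for entry in affected:
        reasons = merged.setdefault(entry["stack_slug"], [])
        for reason in entry.get("affected_all", []):
            if reason not in reasons:
                reasons.append(reason)
    return merged


# Hand-trimmed subset of the example output above.
AFFECTED = [
    {
        "component": "test/test-component-override-3",
        "stack_slug": "tenant1-ue2-prod-test-test-component-override-3",
        "affected": "stack.env",
        "affected_all": ["stack.env"],
    },
    {
        "component": "top-level-component3",
        "stack_slug": "tenant1-ue2-test-1-top-level-component3",
        "affected": "file",
        "affected_all": ["file", "folder"],
    },
    {
        "component": "top-level-component3",
        "stack_slug": "tenant1-ue2-test-1-top-level-component3",
        "affected": "folder",
        "affected_all": ["file", "folder"],
    },
]

for slug, reasons in merge_by_slug(AFFECTED).items():
    print(f"{slug}: {', '.join(reasons)}")
```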

## Affected Components with Dependencies

The output of the `atmos describe affected` command can include the dependents of the affected components, that is, the components that depend on them.

If the command-line flag `--include-dependents=true` is passed to the `atmos describe affected` command, and there are other components that depend on the affected components in the stack, the command will include a `dependents` property (list) for each affected component. The `dependents` property is hierarchical: each component in the list will also contain a `dependents` property if that component has dependent components as well.
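Because `dependents` nests to arbitrary depth, a consumer of this output typically needs a recursive walk. The following Python sketch shows one way to do that. The sample data is hand-written to match the shape described above (trimmed to `component`, `stack`, and `dependents`), and `walk_dependents` is a hypothetical helper rather than anything Atmos provides.

```python
def walk_dependents(entry, depth=0):
    """Yield (depth, component, stack) for an entry and everything that depends on it."""
    yield depth, entry["component"], entry["stack"]
    # `dependents` may be missing or null on leaf entries, so fall back to an empty list.
    for dependent in entry.get("dependents") or []:
        yield from walk_dependents(dependent, depth + 1)


# Hand-written sample: component-3 depends on component-2, which depends on component-1.
AFFECTED = [
    {
        "component": "component-1",
        "stack": "plat-ue2-dev",
        "dependents": [
            {
                "component": "component-2",
                "stack": "plat-ue2-dev",
                "dependents": [
                    {"component": "component-3", "stack": "plat-ue2-dev"},
                ],
            },
        ],
    },
]

for entry in AFFECTED:
    for depth, component, stack in walk_dependents(entry):
        print("  " * depth + f"{component} ({stack})")
```

The walk is depth-first, so each component is visited before the components that depend on it, which matches the order in which they would usually need to be provisioned.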

For example, suppose that we have the following configuration for the Atmos components `component-1`, `component-2` and `component-3` in the stack `plat-ue2-dev`:

```yaml
components:
  terraform:
    component-1:
      metadata:
        component: "terraform-component-1"
      vars: {}

    component-2:
      metadata:
        component: "terraform-component-2"
      vars: {}
      settings:
        depends_on:
          1:
            component: "component-1"

    component-3:
      metadata:
        component: "terraform-component-3"
      vars: {}
      settings:
        depends_on:
          1:
            component: "component-2"
```

:::tip

For more details on how to configure component dependencies, refer to [`atmos describe dependents`](/cli/commands/describe/dependents).

:::

In the above configuration, `component-3` depends on `component-2`, whereas `component-2` depends on `component-1`.

If all the components are affected (modified) in the current working branch, the `atmos describe affected --include-dependents=true` command will produce the following result:

```json

[

{

"component": "component-1",

"stack": "plat-ue2-dev",

"stack_slug": "plat-ue2-dev-component-1",

"included_in_dependents": false,

"dependents": [

{

"component": "component-2",

"stack": "plat-ue2-dev",

"stack_slug": "plat-ue2-dev-component-2",

"dependents": [

{